Build an app with Python

Do you have an idea for an app?

Or maybe a useful bit of code you want to share with others, but you’re not sure where to start?

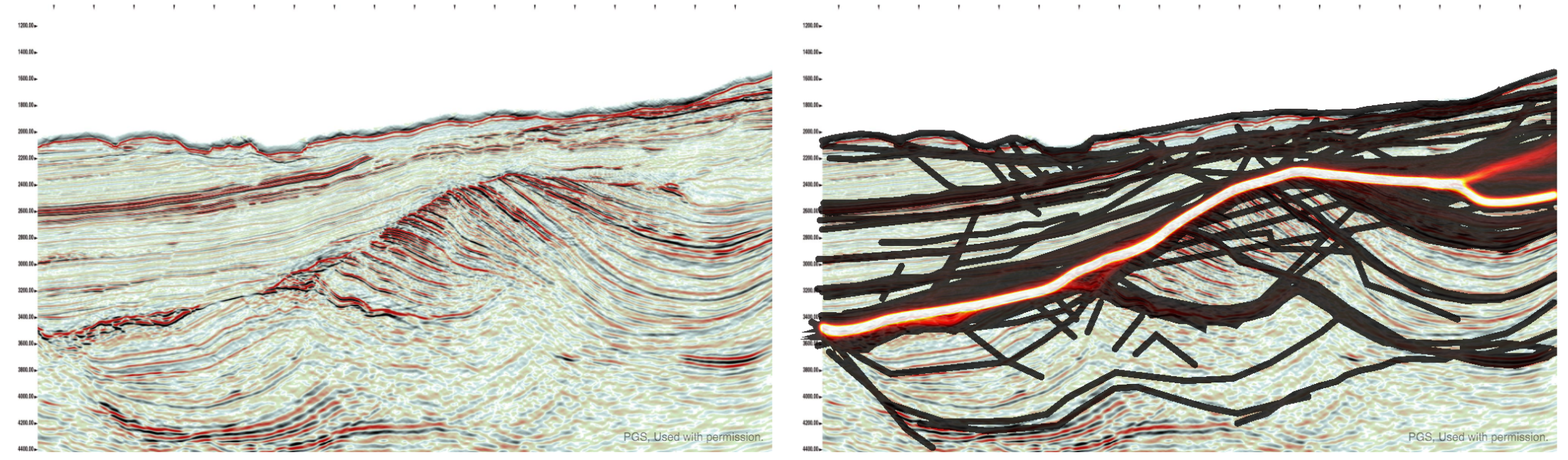

Lots of people come to our Geocomputing class, which is for outright beginners, saying, "I want to build an app". Most of them are thinking of a mobile or desktop app, but most beginners don't know much about the alternatives. Getting useful software into other people’s hands doesn’t necessarily mean making a desktop application. Alternatives include programming libraries, command line tools, and web applications with or without public machine interfaces (so-called APIs), and it’s hard to discover and learn about things you don’t know exist.

Now, coming up with a streamlined set of questions to figure out which kind of tool might best match your goals for ‘an app’ is probably impossible. So I gave it a try:

There’s a lot of undocumented nuance in this flowchart. For example:

There are a lot of other ways to achieve all of the things I mention in the orange boxes. I picked a few examples, but you could also make a web app (with an API) using Flask or Django. You can make a library or CLI (command line interface) tool with modules from the standard library. There are lots of ways to build a desktop app with a GUI (none of them exactly easy). Indeed, you can run a web app on the desktop in various ways.
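To make the 'library or CLI tool with modules from the standard library' option a bit more concrete, here is a minimal sketch. Everything in it is invented for illustration: the function `feet_to_metres`, the helper `build_parser` and the file name `convert.py` are hypothetical, and the only dependency is `argparse` from the standard library. It is one possible shape for such a tool, not the only one.

```python
"""A tiny 'library' and command line tool in one file (hypothetical example)."""
import argparse


def feet_to_metres(feet: float) -> float:
    """The 'library' part: other people's code can import and call this."""
    return feet * 0.3048


def build_parser() -> argparse.ArgumentParser:
    """Describe the command line interface: one positional number."""
    parser = argparse.ArgumentParser(description="Convert feet to metres.")
    parser.add_argument("feet", type=float, help="length in feet")
    return parser


def main(argv=None) -> int:
    """The 'CLI' part: parse the arguments, call the library function, print."""
    args = build_parser().parse_args(argv)
    print(f"{feet_to_metres(args.feet):.3f}")
    return 0


# To run this file as a script, end it with the usual guard:
#
#     if __name__ == "__main__":
#         raise SystemExit(main())
```

With that guard in place, and assuming the file is saved as `convert.py`, running `python convert.py 100` would print the converted value, while `from convert import feet_to_metres` gives other people the same function as a library. The point of the design is that the useful code lives in a plain function, so the same function could later sit behind a web app or API without being rewritten.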

You might be wondering, “where is ‘Build a mobile app’?” It’s not there. I don’t think building native mobile apps is usually the best idea, especially for relative beginners to Python. Web apps are easier to make, they work on any platform, and are easier to maintain. It helps if you’re online of course, but it is possible to write web apps that work offline too.

The main idea is to make something. You want to build the easiest or fastest thing that solves the problem for a few important users and use cases. Because if you can make something they will at least test a few times, you can get some awesome feedback for the next iteration — which might be a completely different thing.

So, take it with a large grain of salt! I hope it’s a tiny bit useful, and at least gives you some things to Google the next time you’re thinking about building ‘an app’.

I tweeted about this flowchart. If you want to adapt it for your own purposes, the original is here.

Thank you to Software Undergrounders Rafael, Lukas, Leo, Kent, Rob, Martin and Evan for helping me improve it. I’m still responsible for its many flaws.

Except where noted, this content is licensed
