A machine learning safety net

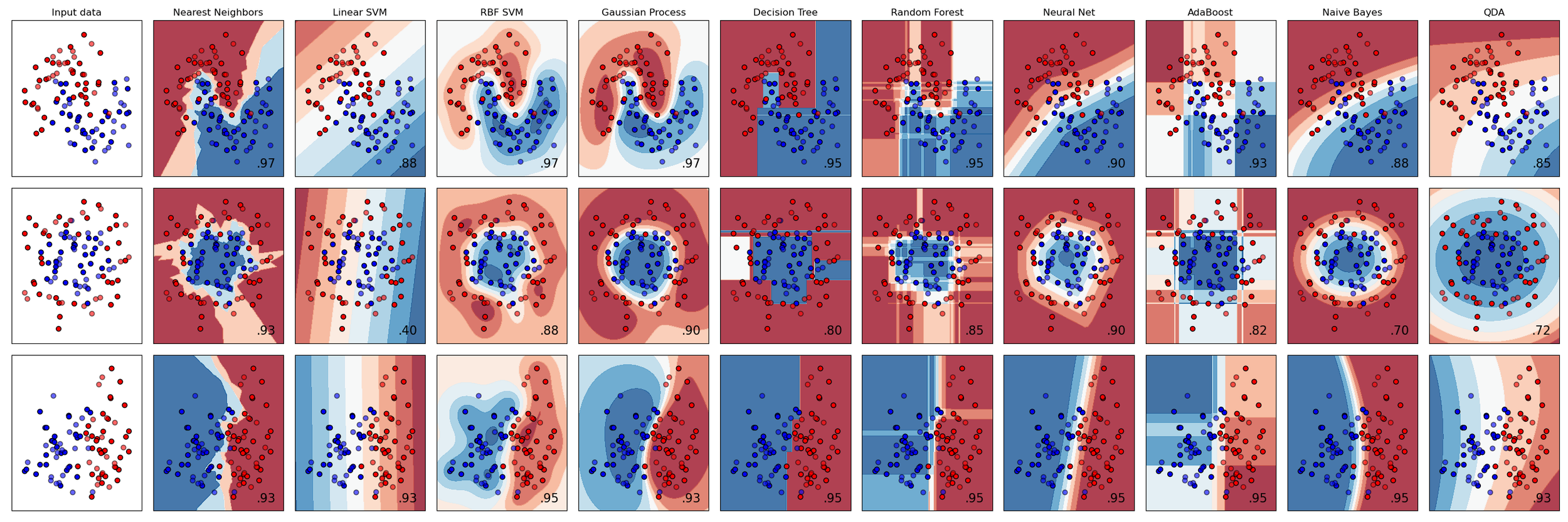

A while back, I wrote about machine learning safety measures. I was thinking about how easy it is to accidentally make terrible models (e.g. training a support vector machine on unscaled data), or misuse good models (e.g. forgetting to scale data before making a prediction). I suggested that one solution might be to make tools that help spot these kinds of mistakes:

“[We should build] software to support good practice. Many of the problems I’m talking about are quite easy to catch, or at least warn about, during the training and evaluation process. Unscaled features, class imbalance, correlated features, non-IID records, and so on. Education is essential, but software can help us notice and act on them.”

Introducing redflag

I’m pleased, and a bit nervous, to introduce redflag, a new Python library to help find the sorts of issues I’m describing. The vision for this tool is as a kind of safety net, or ‘entrance exam for data’ (a phrase Evan coined several years ago). It should be able to look at an array (or Pandas DataFrame), and flag potential issues, perhaps generating a report. And it should be able to sit in your Scikit-Learn pipeline, watching for issues.

The current version, 0.1.9, is still rather rough and experimental. The code is far from optimal, with quite a bit of repetition. But it does a few useful things. For example, suppose we have a DataFrame with a column, Lithology, which contains strings denoting 9 rock types (‘sandstone’, ‘limestone’, etc). We’d like to know whether the classes are ‘balanced’, that is, present in roughly similar numbers. If they are not, we will have to be careful with how we split this dataset up for our model evaluation workflow.

    >>> import redflag as rf
    >>> rf.imbalance_degree(df['Lithology'])
    3.37859304086633
    >>> rf.imbalance_ratio(df['Lithology'])
    8.347368421052632

The imbalance degree, defined by Ortigosa-Hernandez et al. (2017), tells us that there are 4 minority classes (the next integer above this number), and that the imbalance severity is somewhere in the middle (3.1 would be well balanced, 3.9 would be strongly imbalanced). The simpler imbalance ratio tells us that there’s about 8 times as much of the biggest majority class as of the smallest minority class. Conclusion: depending on the size of this dataset, the class imbalance is probably not a show-stopper, but we need to pay attention.
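To make those two numbers a little more concrete, here is a minimal sketch of the simpler ideas behind them. It is not redflag's implementation: the function names and the toy labels are made up for illustration, and I am assuming the usual definition of a minority class as one with fewer samples than a perfectly balanced dataset would give it.

```python
import math
from collections import Counter


def imbalance_ratio(labels):
    """Count of the most common class divided by the count of the rarest."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


def minority_classes(labels):
    """Classes with fewer samples than an even split would give them."""
    labels = list(labels)
    counts = Counter(labels)
    expected = len(labels) / len(counts)  # per-class count if balanced
    return sorted(c for c, n in counts.items() if n < expected)


# Hypothetical labels, not the dataset from the post.
labels = ["sandstone"] * 8 + ["shale"] * 4 + ["limestone"] * 2 + ["dolomite"] * 2

ratio = imbalance_ratio(labels)
minorities = minority_classes(labels)

# The imbalance degree from the post rounds up to the number of minority classes.
n_minority_from_degree = math.ceil(3.37859304086633)

print(ratio, minorities, n_minority_from_degree)
```

In the toy labels, shale sits exactly at the balanced count, so it is not counted as a minority class.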

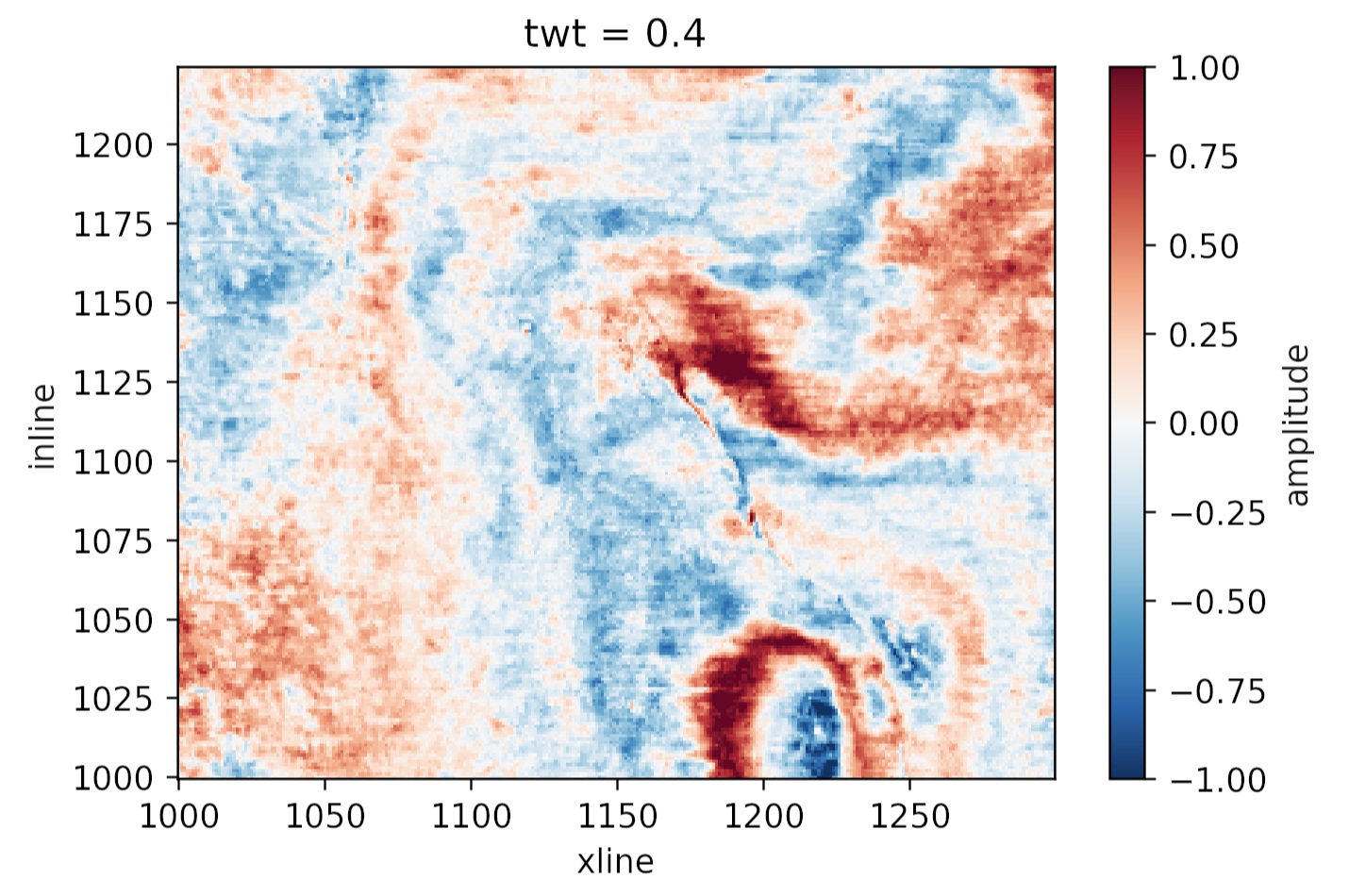

Our dataset contains well log data. Unless they are very far apart, well log samples are usually not independent — they are correlated in depth — and this means we can’t split the data randomly in our evaluation workflow. Redflag has a function to help detect features that are correlated to themselves in this way:

    >>> rf.is_correlated(df['GR'])
    True

We need to be careful!
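One simple way to think about self-correlation is the lag-1 autocorrelation: how strongly each sample resembles the one before it. The sketch below is only an illustration of that idea, using synthetic data and a made-up function name. I am not claiming this is the test that rf.is_correlated() applies.

```python
import math
import random


def lag1_autocorrelation(values):
    """Correlation between a sequence and itself shifted by one sample."""
    n = len(values)
    mean = sum(values) / n
    deviations = [v - mean for v in values]
    numerator = sum(a * b for a, b in zip(deviations[:-1], deviations[1:]))
    denominator = sum(d * d for d in deviations)
    return numerator / denominator


random.seed(42)

# A smooth, log-like curve: neighbouring samples are very similar.
smooth = [math.sin(i / 20) for i in range(500)]

# Independent noise: neighbouring samples are unrelated.
noise = [random.gauss(0, 1) for _ in range(500)]

r_smooth = lag1_autocorrelation(smooth)
r_noise = lag1_autocorrelation(noise)

print(f"smooth: {r_smooth:.3f}, noise: {r_noise:.3f}")
```

A value near 1 suggests that adjacent samples carry almost the same information, which is why a random split would leak between train and test.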

Another function, rf.wasserstein(), computes the Wasserstein distance, aka the earth mover’s distance, between distributions. This can help us figure out if our data splits all have similar distributions or not, which is an important condition of our evaluation workflow. I’ll feed it 3 splits in which I have forgotten to scale the first feature (i.e. the first column) in the X_test dataset:

    >>> rf.wasserstein([X_train, X_val, X_test])
    array([[32.108, 0.025, 0.043, 0.034],
           [16.011, 0.025, 0.039, 0.057],
           [64.127, 0.049, 0.056, 0.04 ]])

The large distances in the first column are the clue that the distribution of the data in this column varies a great deal between the three datasets. Plotting the distributions makes it clear what happened.
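For intuition about why an unscaled feature stands out, here is a small sketch of the 1D Wasserstein distance for two samples of equal size, where it reduces to the mean absolute difference between the sorted values. The function name and the synthetic numbers are assumptions for illustration only, and this is not how redflag computes its result.

```python
import random


def wasserstein_1d(a, b):
    """1D Wasserstein distance between two equal-sized samples."""
    if len(a) != len(b):
        raise ValueError("This sketch only handles samples of equal size.")
    # Pair up the sorted values and average the gaps between them.
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


random.seed(0)

# Hypothetical feature: scaled in train, scaled or unscaled in test.
train = [random.gauss(0, 1) for _ in range(1000)]
test_scaled = [random.gauss(0, 1) for _ in range(1000)]
test_unscaled = [random.gauss(60, 25) for _ in range(1000)]

d_scaled = wasserstein_1d(train, test_scaled)
d_unscaled = wasserstein_1d(train, test_unscaled)
d_shift = wasserstein_1d([0, 1, 3], [5, 6, 8])  # a pure shift of 5

print(f"scaled: {d_scaled:.3f}, unscaled: {d_unscaled:.3f}, shift: {d_shift}")
```

For samples of different sizes, scipy.stats.wasserstein_distance handles the general 1D case.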

Working with sklearn

Since we’re often already working with scikit-learn pipelines, and because I don’t really want to have to remember all these extra steps and functions, I thought it would be useful to make a special redflag pipeline that runs “all the things”. It’s called rf.pipeline and it might be all you need. Here’s how to use it:

    from sklearn.pipeline import make_pipeline
    from sklearn.preprocessing import StandardScaler
    from sklearn.svm import SVC

    pipe = make_pipeline(StandardScaler(), rf.pipeline, SVC())

Here’s what this object contains:

    Pipeline(steps=[('standardscaler', StandardScaler()),
                    ('pipeline',
                     Pipeline(steps=[('rf.imbalance', ImbalanceDetector()),
                                     ('rf.clip', ClipDetector()),
                                     ('rf.correlation', CorrelationDetector()),
                                     ('rf.outlier', OutlierDetector()),
                                     ('rf.distributions',
                                      DistributionComparator())])),
                    ('svc', SVC())])

Those redflag items in the inner pipeline are just detectors (think of them like smoke alarms); they do not change any data. Some of them acquire statistics during model fitting, then apply them during prediction. For example, the DistributionComparator learns the feature distributions from the training data, then compares the prediction data to them, to help ensure that you aren’t trying to extrapolate with your model. So it will warn you if you train a model on low-GR sandstones then try to predict on high-GR shales.
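The smoke-alarm pattern itself is easy to sketch. The class below is hypothetical and far cruder than anything in redflag: it only remembers the range of each feature during fit, warns during transform if new data falls outside that range, and returns the data untouched. It follows the fit/transform convention but deliberately avoids importing scikit-learn. To use something like it in a real pipeline you would probably want to inherit from BaseEstimator and TransformerMixin.

```python
import warnings


class RangeDetector:
    """Hypothetical pass-through detector: warns, never changes the data."""

    def fit(self, X, y=None):
        # Learn the per-feature range from the training data.
        columns = list(zip(*X))
        self.min_ = [min(c) for c in columns]
        self.max_ = [max(c) for c in columns]
        return self

    def transform(self, X):
        # Compare new data to the learned ranges and warn on extrapolation.
        for j, column in enumerate(zip(*X)):
            n_outside = sum(
                1 for v in column if v < self.min_[j] or v > self.max_[j]
            )
            if n_outside:
                warnings.warn(
                    f"Feature {j}: {n_outside} value(s) outside the training range."
                )
        return X  # Data passes through unchanged.

    def fit_transform(self, X, y=None):
        return self.fit(X, y).transform(X)


# Made-up data: column 0 plays the role of GR, column 1 of density.
X_train = [[20.0, 2.1], [35.0, 2.3], [50.0, 2.2]]
X_new = [[30.0, 2.2], [140.0, 2.25]]

detector = RangeDetector().fit(X_train)

with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    X_out = detector.transform(X_new)

for w in caught:
    print(w.message)
```

Only the first feature triggers a warning here, because 140.0 lies outside the range seen in training.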

Here’s what happens when I fit my data with this pipeline:

These are just warnings, and it’s up to me to act on them. I can adjust detection thresholds and other aspects of the algorithms under the hood, but the goal is for redflag to wave its little flag without getting in the way. Apart from the warnings, this pipeline works exactly as it did before.

If this project sounds interesting or useful to you, please give it a look. The documentation is here, and contains more examples like those above. If you find bugs or want to request enhancements, there’s the GitHub Issues page. And if you use it for anything you can share, I’d love to hear how you get along!

Except where noted, this content is licensed
