A forensic audit of seismic data

/The SEG-Y “standard” is famously non-standard. (Those air quotes are actually part of the “standard”.)

For example, the inline and crossline location of a given trace — two things that you must have in order to load the data vaguely properly — are “recommended” (remember, it’s a “standard”) to be given in the trace’s header, at byte locations 189 and 193 respectively. Indeed, they might well be there. Or 1 and 5 (well, 5 or 9). Or somewhere else. Or not there at all.

Don Robinson at Resolve told me recently that he has seen more than 180 byte-location combinations, and he said another service company had seen more than 300.

All this can make loading seismic data really, really annoying.

I’d like to propose that the community performs a kind of forensic audit of SEG-Y files. I have 5 main questions:

What proportion of files claim to be Rev 0, Rev 1, and Rev 2? And what standard are they actually? (If any!)

What proportion of files in the wild use IBM vs IEEE floats? What about integers?

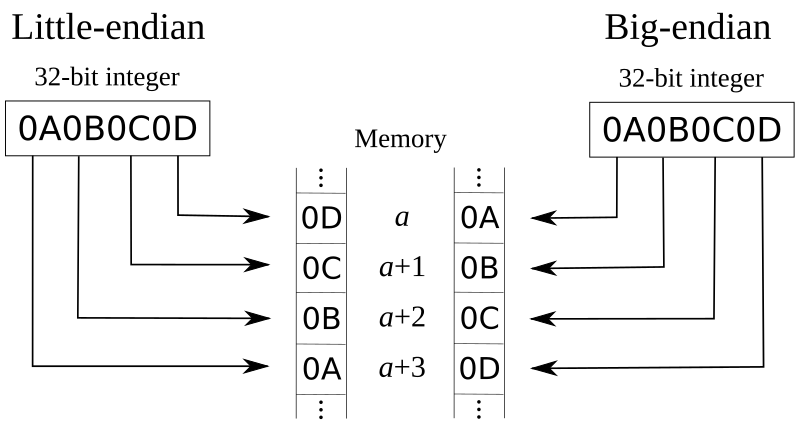

What proportion of files in the wild use little-endian vs big-endian byte order. (Please tell me there's no middle-endian data out there!)

What proportion of files in the wild use EBCDIC vs ASCII encoded textual file headers? (Again, I would hope there are no other encodings in use, but I bet there are.)

What proportion of files use the Strongly recommended and Recommended byte locations for trace numbers, sample counts, sample interval, coordinates and inline–crossline numbers?

For each of these <things> it would also be interesting to know:

How does <thing> vary with the other things? That is, what's the cross-correlation matrix?

How does <thing> vary with the age of the file? Is there a temporal trend?

How does <thing> vary with the provenance of the file? What's the geographic trend? (For example, Don told me that the prevalence of PC-based interpretation packages in Canada led to widespread early adoption of IEEE floats and little-endian byte order; indeed, he says that 90% of the SEG-Y he sees in the wild is still IBM ormatted floats!)

While we’re at it, I'd also like in some more esoteric things:

How many files have cornerpoints in the text header, and/or trace locations in trace headers?

How many files have an unambiguous CRS in the text header?

How many files have information about the processing sequence in the text header? (E.g. imaging details, filters, etc.)

How many files have incorrect information in the headers (e.g. locations, sample interval, byte format, etc)

How many processors bother putting useful things like elevation, filters, sweeps, fold at target, etc, in the trace headers?

I don’t quite know how such a survey would happen. Most of these things are obviously detectable from the files themselves. Perhaps some of the many seismic data management systems already track these things. Or maybe you’re a data manager and you have some anecdotal data you can share.

What do you think? I’d love to hear your thoughts in the comments. Maybe there’s a good hackathon project here!

Except where noted, this content is licensed

Except where noted, this content is licensed