Higher dimensions

The map is obviously two-dimensional, but it's fairly easy to conceive of 'size' in any number of dimensions. This is important, because we often deal with more than the 2 dimensions on a map, or even the 3 dimensions of a seismic stack. For example, we think of raw so-called 3D seismic data as having 5 dimensions (x position, y position, offset, time, and azimuth). We might even formulate a machine learning task with a hundred or more dimensions (or 'features').

Why do we care about measuring distances in high dimensions? When we're dealing with data in these high-dimensional spaces, 'distance' is a useful way to measure the similarity between two points. For example, I might want to select those samples that are close to a particular point of interest. Or, from among the points satisfying some constraint, select the one that's closest to the origin.

Definitions and nomenclature

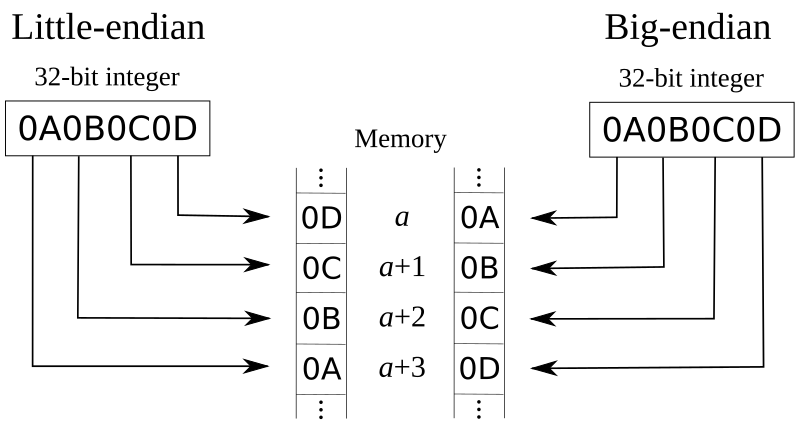

We'll define norms in the context of linear algebra, which is the study of vector spaces (think of multi-dimensional 'data spaces' like the 5D space of seismic data). A norm is a function that assigns a positive scalar size to a vector \(\mathbf{v}\), with a size of zero reserved for the zero vector (in the Cartesian plane, the zero vector has coordinates (0, 0) and is usually called the origin). Any norm \(\|\mathbf{v}\|\) of this vector satisfies the following conditions:

Absolutely homogeneous. The norm of \(\alpha\mathbf{v}\) is equal to \(|\alpha|\) times the norm of \(\mathbf{v}\).

Subadditive. The norm of \( (\mathbf{u} + \mathbf{v}) \) is less than or equal to the norm of \(\mathbf{u}\) plus the norm of \(\mathbf{v}\). In other words, the norm satisfies the triangle inequality.

Positive. The first two conditions imply that the norm is non-negative.

Definite. Only the zero vector has a norm of 0.
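As a quick numerical sanity check of these four conditions, here is a minimal sketch that uses the ordinary Euclidean length as the example norm. The vectors `u` and `v`, the scalar `alpha`, and the helper `euclidean_norm` are made up for illustration; a check on a couple of vectors is not a proof, of course.

```python
import math

def euclidean_norm(v):
    """Euclidean length of a vector given as a sequence of numbers."""
    return math.sqrt(sum(x * x for x in v))

# Made-up example vectors and scalar (assumptions, not from the text).
u = [3.0, -4.0, 12.0]
v = [1.0, 2.0, -2.0]
alpha = -2.5

scaled = [alpha * x for x in v]          # alpha * v
summed = [x + y for x, y in zip(u, v)]   # u + v

# Absolutely homogeneous: ||alpha v|| equals |alpha| ||v||.
is_homogeneous = math.isclose(euclidean_norm(scaled),
                              abs(alpha) * euclidean_norm(v))

# Subadditive (triangle inequality): ||u + v|| <= ||u|| + ||v||.
is_subadditive = euclidean_norm(summed) <= euclidean_norm(u) + euclidean_norm(v)

# Positive: the norm is never negative.
is_positive = euclidean_norm(u) >= 0 and euclidean_norm(v) >= 0

# Definite: the zero vector has norm 0, and a non-zero vector does not.
is_definite = euclidean_norm([0.0, 0.0, 0.0]) == 0 and euclidean_norm(v) > 0

print(is_homogeneous, is_subadditive, is_positive, is_definite)
```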

Kings, crows and taxicabs

Let's return to the point about lots of ways to define distance. We'll start with the most familiar definition of distance on a map: the Euclidean distance, aka the \(\ell_2\) or \(L_2\) norm (confusingly, sometimes the two is written as a superscript), the 2-norm, or sometimes just 'the norm' (who says maths has too much jargon?). This is the 'as-the-crow-flies distance' on the map above, and we can calculate it using Pythagoras:

$$ \|\mathbf{v}\|_2 = \sqrt{(a_x - b_x)^2 + (a_y - b_y)^2} $$

You can extend this to an arbitrary number of dimensions: just keep adding the squared elementwise differences. We can also calculate the norm of a single vector in n-space, which is really just the distance between the origin and the vector:

$$ \|\mathbf{u}\|_2 = \sqrt{u_1^2 + u_2^2 + \ldots + u_n^2} = \sqrt{\mathbf{u} \cdot \mathbf{u}} $$

As shown here, the 2-norm of a vector is the square root of its dot product with itself.
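To make the two formulas concrete, here is a small sketch in plain Python. The points `a`, `b`, `p` and `q` are hypothetical, and `euclidean_distance` is an illustrative helper rather than anything from a library. It forms the difference vector, takes its dot product with itself, and then the square root.

```python
import math

def euclidean_distance(a, b):
    """Crow-flies distance between two points with the same number of dimensions."""
    v = [ai - bi for ai, bi in zip(a, b)]   # difference vector
    dot = sum(vi * vi for vi in v)          # dot product of v with itself
    return math.sqrt(dot)

# Two hypothetical points on a 2D map.
a = (1.0, 2.0)
b = (4.0, 6.0)
d_map = euclidean_distance(a, b)   # sqrt(3**2 + 4**2) = 5.0

# The same function works unchanged in 5 dimensions.
p = (0.0, 1.0, 2.0, 3.0, 4.0)
q = (1.0, 1.0, 4.0, 3.0, 6.0)
d_5d = euclidean_distance(p, q)    # sqrt(1 + 0 + 4 + 0 + 4) = 3.0

# The norm of a single vector is its distance from the origin.
norm_of_b = euclidean_distance(b, (0.0, 0.0))
```

From Python 3.8 onwards the standard library's `math.dist(p, q)` computes the same distance.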

So the crow-flies distance is fairly intuitive... what about that awkward city block distance? This is usually referred to as the Manhattan distance, the taxicab distance, the \(\ell_1\) or \(L_1\) norm, or the 1-norm. As you can see on the map, it's just the sum of the absolute distances in each dimension, x and y in our case:

$$ \|\mathbf{v}\|_1 = |a_x - b_x| + |a_y - b_y| $$

What's this magic number 1 all about? It turns out that the distance metric can be generalized as the so-called p-norm, where p can take any value from 1 up to infinity (for p less than 1, the triangle inequality fails, so the result is no longer a true norm). The definition of the p-norm is consistent with the two norms we just met:

$$ \| \mathbf{u} \|_p = \left( \sum_{i=1}^n | u_i | ^p \right)^{1/p} $$

[EDIT, May 2021: This generalized version is sometimes called the Minkowski distance, e.g. in the scipy documentation.]
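A direct transcription of the p-norm formula into plain Python might look like the sketch below. The function name `p_norm` and the example vector are assumptions for illustration, and the choice to reject p < 1 is a design decision of this sketch. Setting p = 1 and p = 2 recovers the taxicab and crow-flies results.

```python
def p_norm(u, p):
    """p-norm of a sequence of numbers, for finite p >= 1."""
    if p < 1:
        # This sketch only handles the range where the result is a true norm.
        raise ValueError("p must be at least 1")
    return sum(abs(x) ** p for x in u) ** (1.0 / p)

# Hypothetical difference vector v = a - b.
v = [3.0, -4.0]

manhattan = p_norm(v, 1)   # |3| + |-4| = 7
euclidean = p_norm(v, 2)   # sqrt(9 + 16) = 5
cubic = p_norm(v, 3)       # somewhere between 4 and 5

print(manhattan, euclidean, cubic)
```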

In practice, I've only ever seen p = 1, 2, or infinity (and 0, but we'll get to that). Let's look at the meaning of the \(\infty\)-norm, aka the \(\ell_\infty\) or \(L_\infty\) norm, which is sometimes called the Chebyshev distance or chessboard distance (because it defines the minimum number of moves for a king to any given square):

$$ \|\mathbf{v}\|_\infty = \mathrm{max}(|a_x - b_x|, |a_y - b_y|) $$

In other words, the Chebyshev distance is simply the largest absolute value among the elements of a given vector. In a nutshell, the infinitieth root of the sum of a bunch of numbers raised to the infinitieth power is the same as the infinitieth root of the largest of those numbers raised to the infinitieth power, because infinity is weird like that.
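One way to convince yourself of this limiting behaviour is to watch the p-norm approach the largest absolute element as p grows. This is a rough numerical sketch with a made-up vector; `p_norm` and `chebyshev_norm` are illustrative helper names, not library functions.

```python
def p_norm(u, p):
    """p-norm of a sequence of numbers, for finite p >= 1."""
    return sum(abs(x) ** p for x in u) ** (1.0 / p)

def chebyshev_norm(u):
    """Infinity-norm: the largest absolute value among the elements."""
    return max(abs(x) for x in u)

# Hypothetical vector; its largest absolute element is 4.
v = [3.0, -4.0, 1.0]

# As p increases, the largest element dominates the sum more and more.
powers = (1, 2, 4, 16, 64)
values = [p_norm(v, p) for p in powers]
for p, value in zip(powers, values):
    print(p, value)

print("inf", chebyshev_norm(v))
```

On a chessboard, the same number is how many moves a king needs to travel 3 files and 4 ranks: 4, because diagonal moves cover both directions at once.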

What about p = 0?

Infinity is weird, but so is zero sometimes. Taking the zeroth root of a lot of ones doesn't make a lot of sense, so mathematicians often redefine the \(\ell_0\) or \(L_0\) "norm" (not a true norm) as a simple count of the number of non-zero elements in a vector. In other words, we toss out the 0th root, define \(0^0 := 0 \) and do:

$$ \| \mathbf{u} \|_0 = |u_1|^0 + |u_2|^0 + \cdots + |u_n|^0 $$

(Or, if we're thinking about the points \(\mathbf{a}\) and \(\mathbf{b}\) again, just remember that \(\mathbf{v}\) = \(\mathbf{a}\) - \(\mathbf{b}\).)
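In code, the \(\ell_0\) "norm" is just a count. The sketch below uses a made-up vector and a hypothetical helper, `l0_norm`. It also shows one reason this is not a true norm: scaling the vector does not change the count, so absolute homogeneity fails.

```python
def l0_norm(u):
    """Count the non-zero elements of u (the L0 'norm', not a true norm)."""
    return sum(1 for x in u if x != 0)

# Hypothetical sparse vector with two non-zero elements.
u = [0.0, 3.0, 0.0, -1.5, 0.0]
count = l0_norm(u)

# Doubling every element leaves the count unchanged, whereas a true norm
# would have to double as well.
scaled_count = l0_norm([2 * x for x in u])

print(count, scaled_count)
```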

Computing norms

Let's take a quick look at computing the norm of some vectors in Python:
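A minimal sketch of what that might look like, assuming NumPy is installed and using its `np.linalg.norm` function. The points `a` and `b` are made up for illustration, and the `ord` argument selects which norm to compute.

```python
import numpy as np

# Two hypothetical points on the map (assumed values).
a = np.array([1.0, 2.0])
b = np.array([4.0, 6.0])
v = a - b   # difference vector, here [-3, -4]

l1 = np.linalg.norm(v, ord=1)          # taxicab: 3 + 4
l2 = np.linalg.norm(v)                 # default for a 1D array is the 2-norm
linf = np.linalg.norm(v, ord=np.inf)   # king's moves: max(3, 4)
l0 = np.linalg.norm(v, ord=0)          # count of non-zero elements

print(l1, l2, linf, l0)
```

For 1D arrays, `ord` can be any of these values or another number p, in which case NumPy evaluates the p-norm sum directly.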

Except where noted, this content is licensed
