Le grand hack!

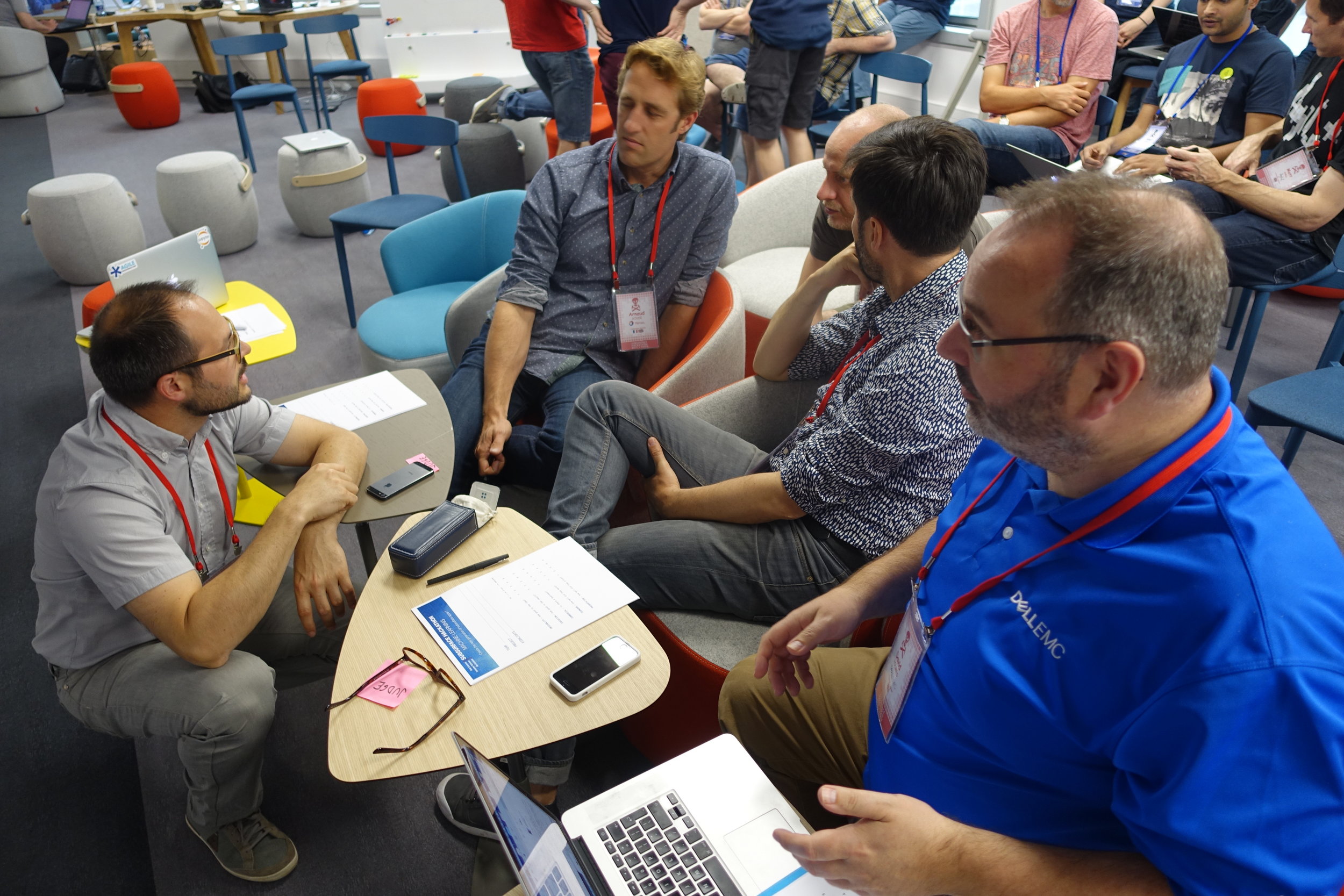

It happened! The Subsurface Hackathon drew to a magnificent close on Sunday, in an intoxicating cloud of code, creativity, coffee, and collaboration. It will take some beating.

Nine months in gestation, the hackathon was on a scale we have not attempted before. Total E&P joined us as co-organizers and made this new reach possible. They also let us use their amazing Booster — a sort of intrapreneurship centre — which was perfect for the event. Their team (thanks especially to Marine and Caroline!) did an amazing job of hosting, as well as providing several professionals from their subsurface software (thanks Jonathan and Yannick!) and data science teams (thanks Victor and David!). Arnaud Rodde and Frédéric Broust, who had to do some organization hacking of their own to make something as weird as a hackathon happen, should be proud of their teams.

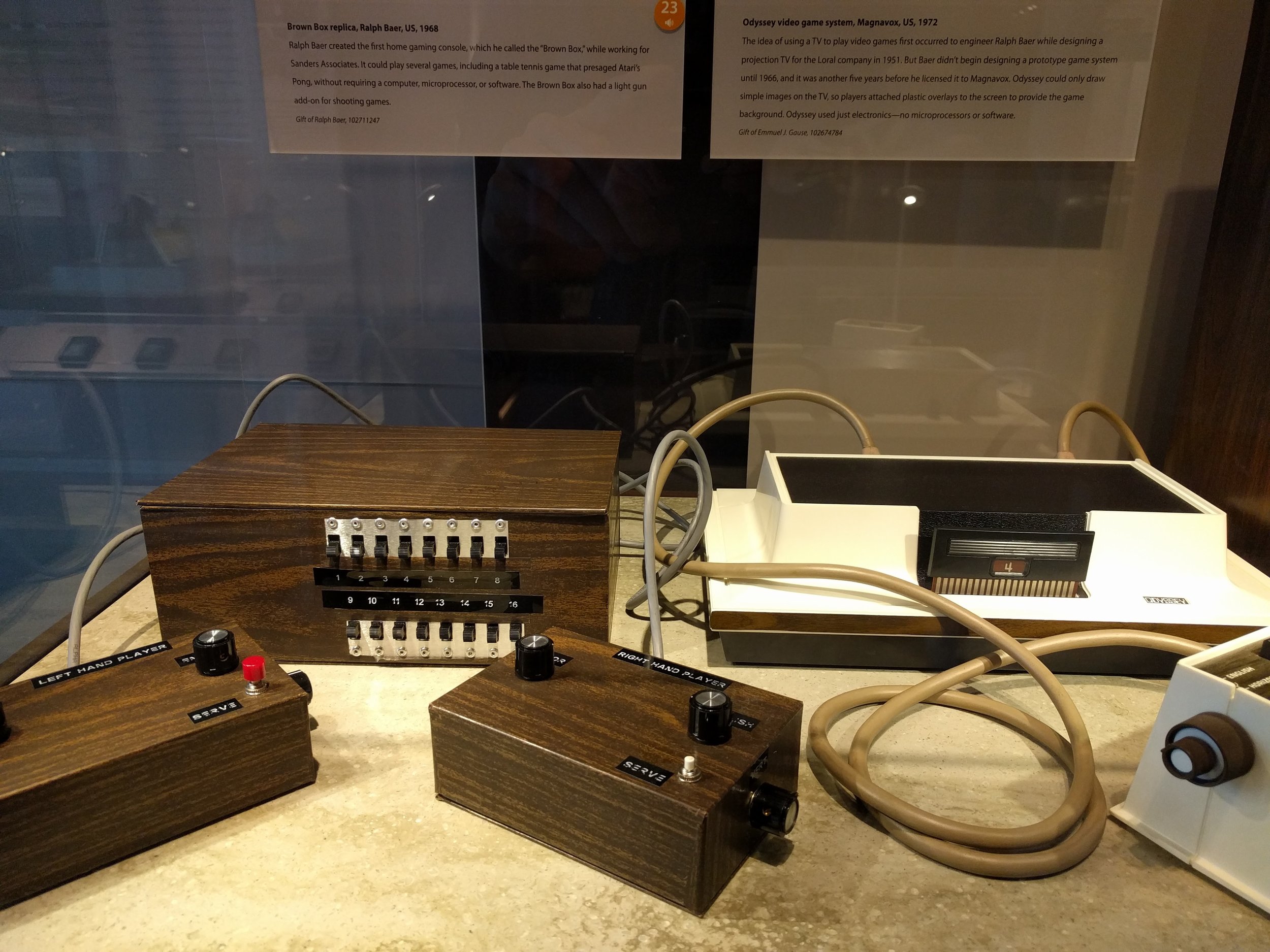

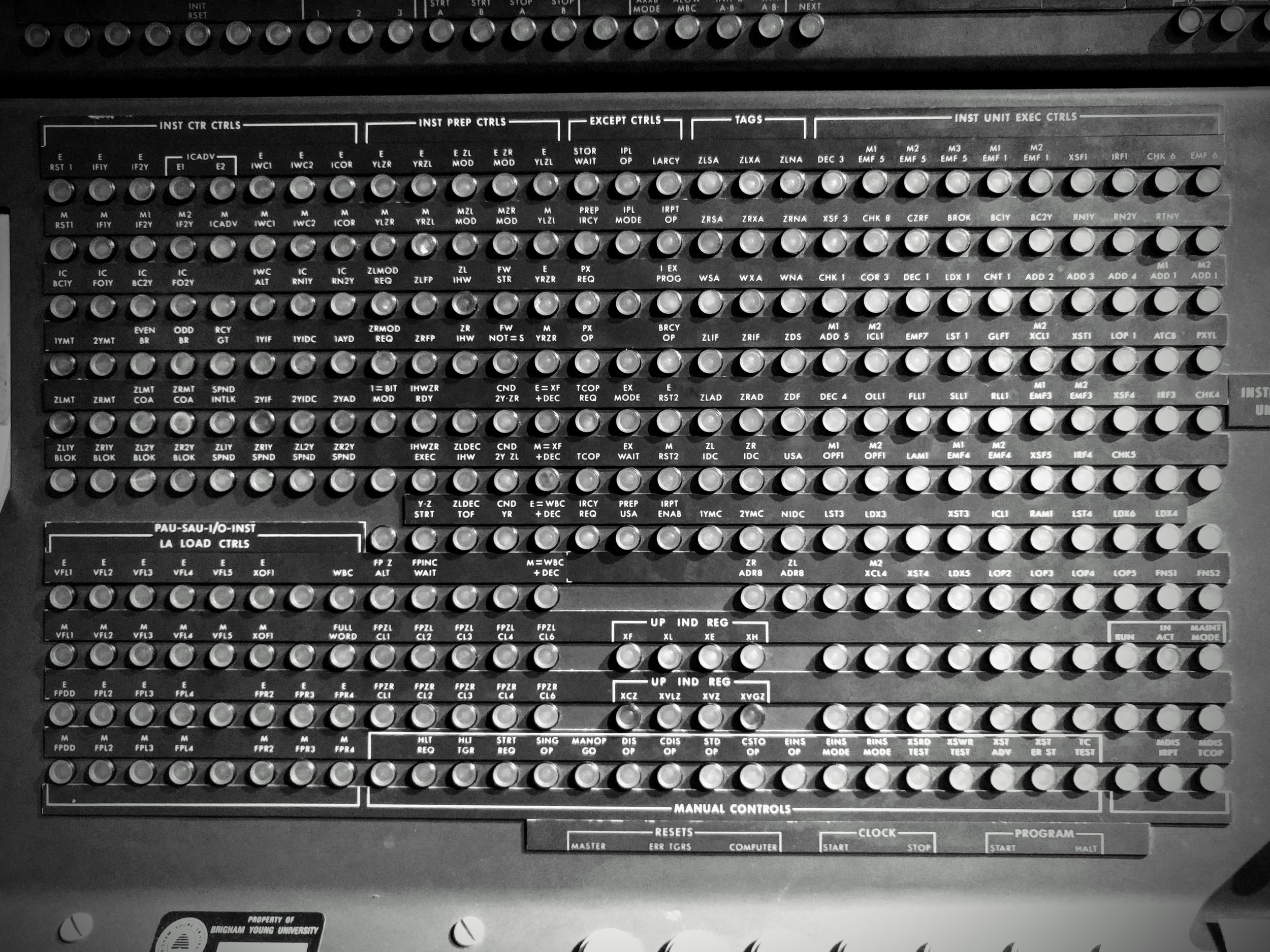

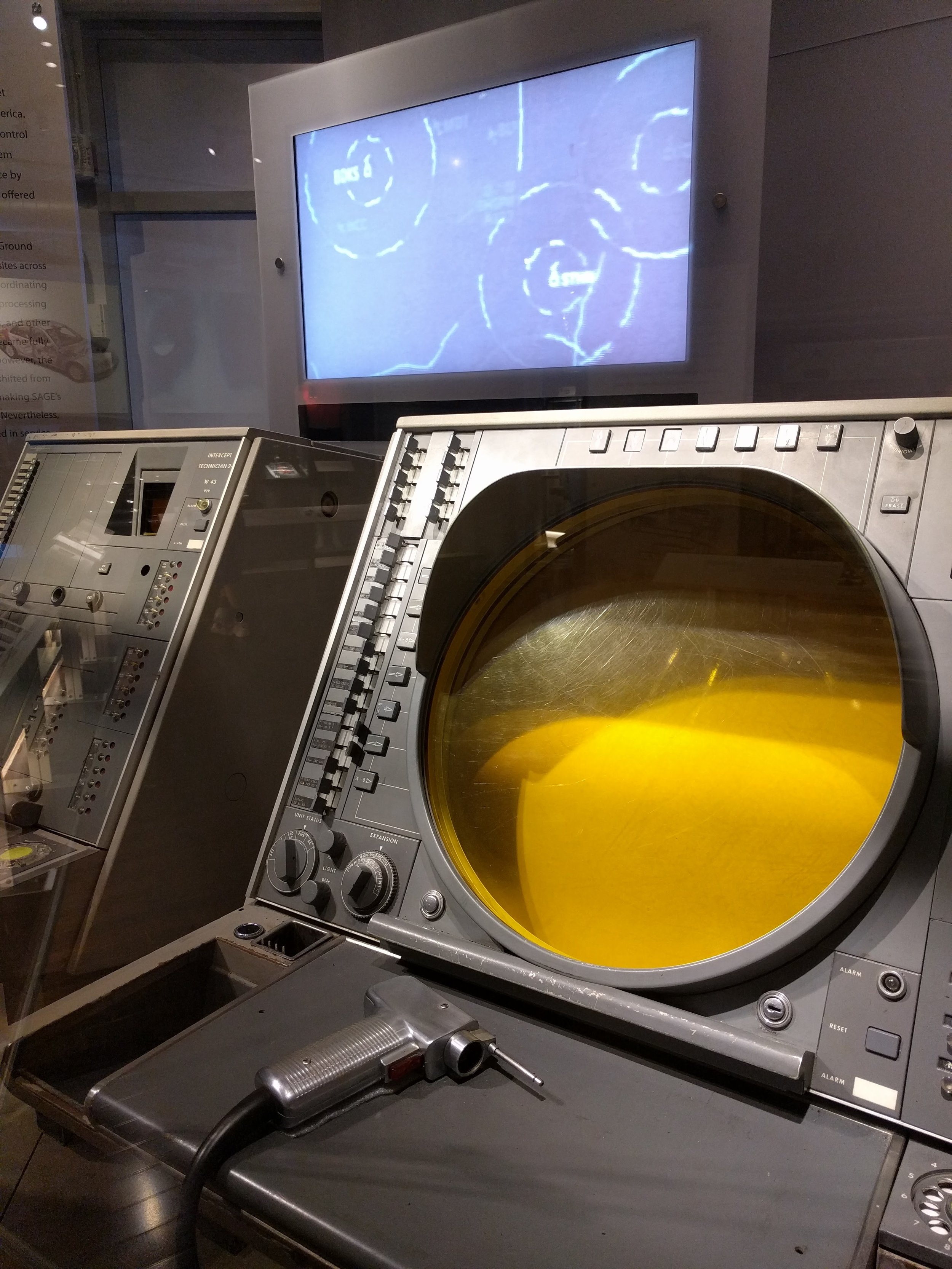

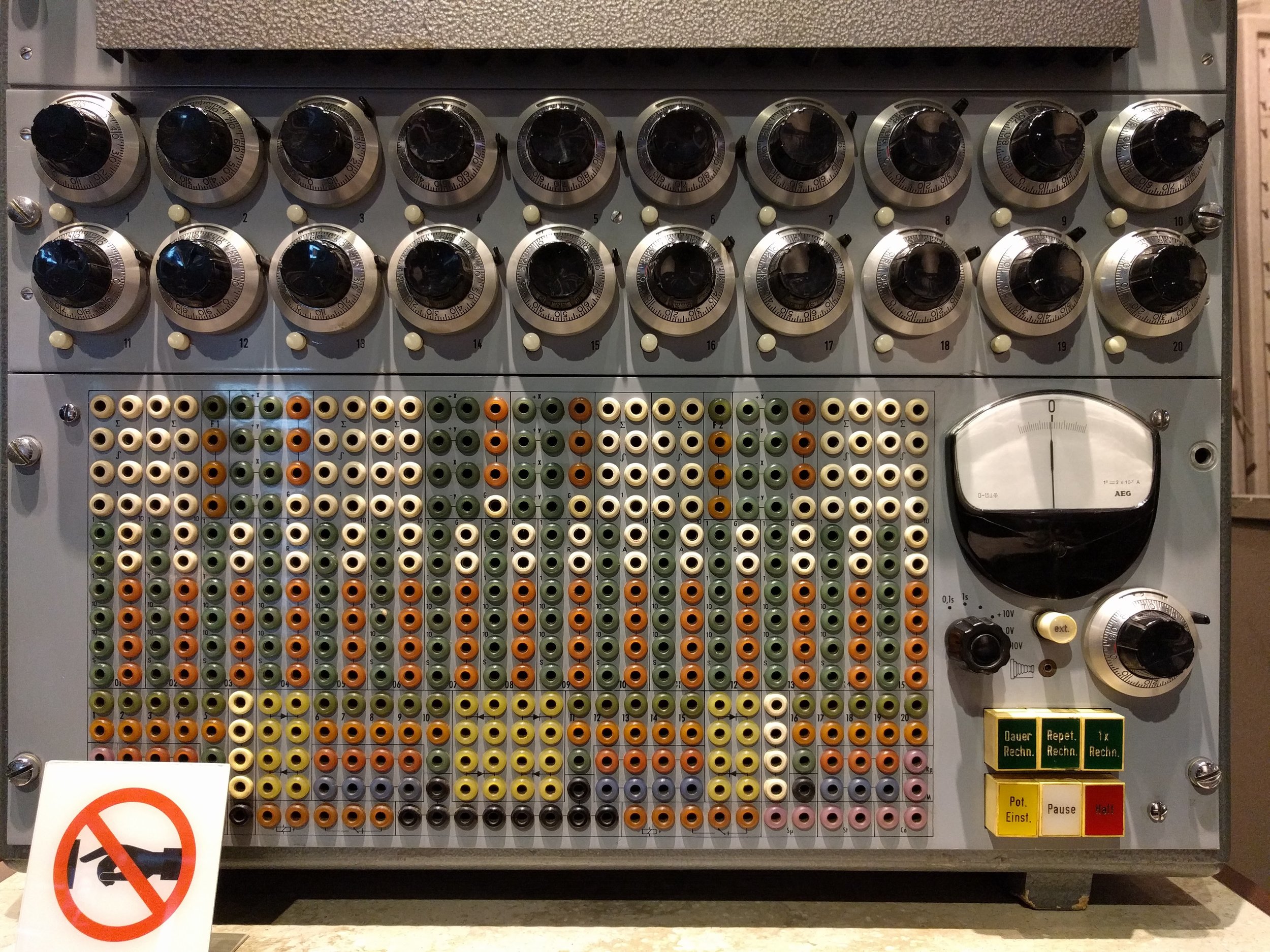

Instead of trying to describe the indescribable, here are some photos:

16 hours of code

13 teams

62 hackers

44 students

4 robots

568 croissants

0 lost-time incidents

I won't say much about the projects for now. The diversity was high — there were projects in thin section photography, 3D geological modeling, document processing, well log prediction, seismic modeling and inversion, and fault detection. All of the projects included some kind of machine learning, and again there was diversity there, including several deep learning applications. Neural networks are back!

Feel the buzz!

If you are curious, Gram and I recorded a quick podcast and interviewed a few of the teams.

It's going to take a few days to decompress and come down from the high. In a couple of weeks I'll tell you more about the projects themselves, and we'll edit the photos and post the best ones to Flickr (and in the meantime there are a few more pics there already).

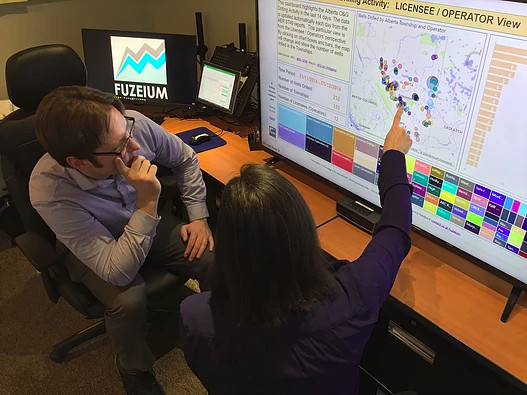

Thank you to the sponsors!

Last thing: we couldn't have done any of this without the support of Dell EMC. David Holmes has been a rock for the hackathon project over the last couple of years, and we appreciate his love of community and code! Thank you too to Duncan and Jane at Teradata, Francois at NVIDIA, Peter and Jon at Amazon AWS, and Gram at Sandstone for all your support. Dear reader: please support these organizations!

Except where noted, this content is licensed
