TRANSFORM 2019

Yesterday I announced that we’re hatching a new plan. The next thing. Today I want to tell you about it.

The project has the codename TRANSFORM. I like the notion of transforms: functions that move you from one domain to another. Fourier transforms. Wavelet transforms. Digital subsurface transforms. Examples:

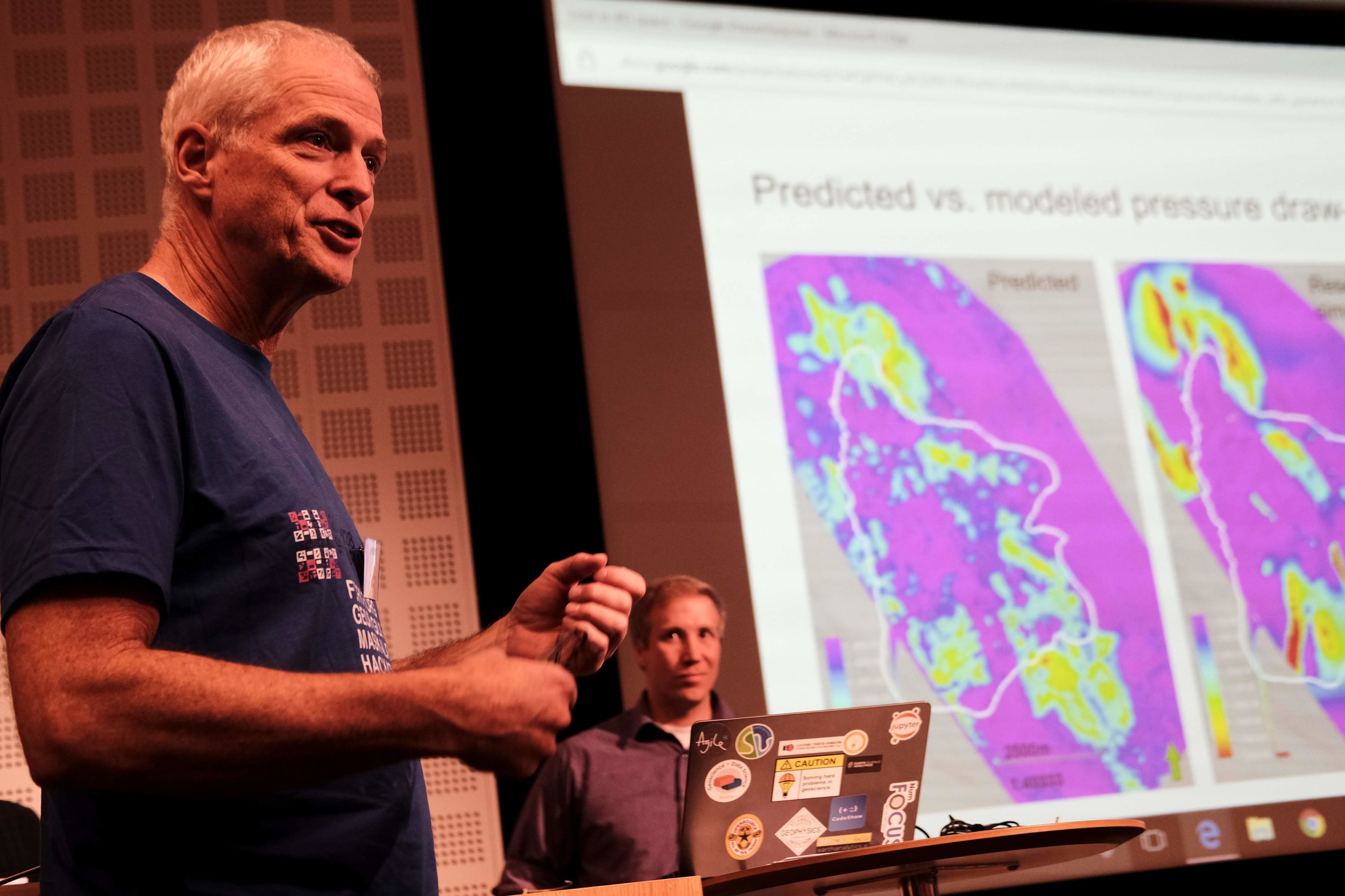

The transformative effect of open source software on subsurface science. Open source accelerates our work!

The transformative effect of collaborative, participatory events on the community. We can make new things!

The transformative effect of training on ourselves and our peers. Lots of us have new superpowers!

Together, we’ve built the foundation for a new, open software platform.

A domain shift

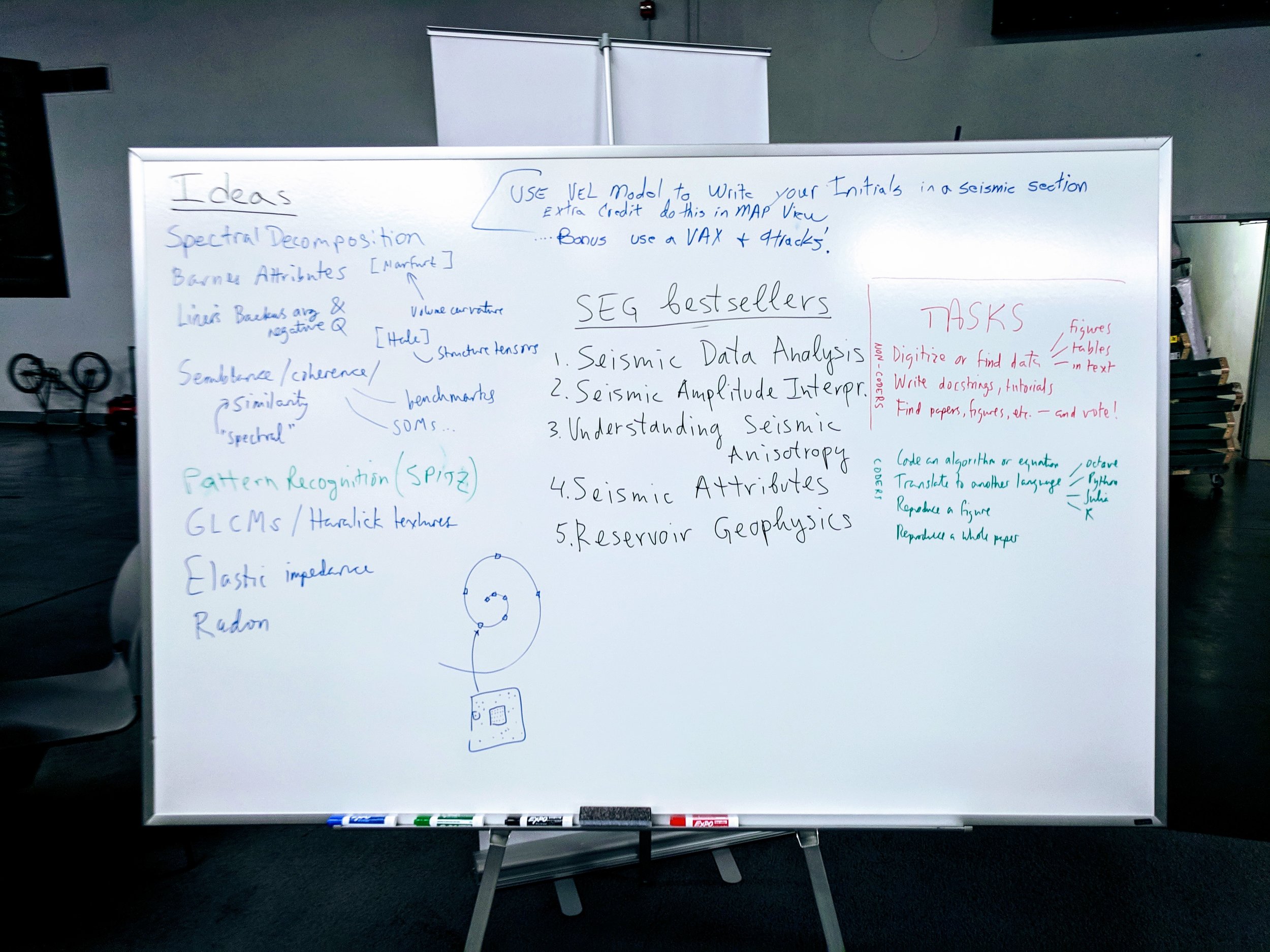

We think it’s time to refocus the hackathons as sprints — purposefully producing a sustainable, long-lasting, high quality, open source software stack that we can all use and combine into new tools, whether open or proprietary, free or commercial.

We think it’s time to bring a full-featured unconference into the mix. The half-day ‘unsessions’ open too many paths, and leave too few explored. We need more time — to share research, plan software projects, and write code.

Together, we can launch a new era in scientific computing for the subsurface.

At the core of this new era is a new open-source software stack, created, maintained, and implemented by a community of scientists and organizations passionate about its potential.

Sign up!

Here’s the plan. We’re hosting an unconference from 5 to 11 May 2019, with full days from Monday to Friday. The event will take place at the Château de Rosay, near Rouen, France. It will be fully residential and fully catered. We have room for about 45 participants.

The goal is to lay down a road map for designing, funding, and building an open source software stack for the subsurface. In the coming days and weeks, we will formulate the plan for the week, with input from the Software Underground. We want to hear from you. Propose a session! Host a sprint! Offer a bounty! There are lots of ways to get involved.

Map data: GeoBasis-DE / BKG / Google, photo: Chateauform.

If you want to be part of this effort, as a developer, an end-user, or a sponsor, then we invite you to join us.

The unconference fee will be EUR 1000, and accommodation and food will be EUR 1500. For students, the corresponding fees will be EUR 240 and EUR 360, respectively. There will be at least 5 bursaries of EUR 1000 available.

For the time being, we will be accepting early commitments, with a deposit of EUR 400 to secure a place (students wishing to register now should get in touch). Soon, you will be able to sign up online… we are working on a smooth process. In the meantime, click here to register your interest, share ideas for content, or sign up by paying a deposit.

Thanks for reading. We look forward to figuring this out together.

I’m delighted to be able to announce that we already have support from Dell EMC. Thanks as ever to David Holmes for his willingness to fund experiments!

In the US or Canada? Don’t despair! There will be a North American edition in Quebec in late September.

Except where noted, this content is licensed
