The abstract lead-time problem

On Tuesday I wrote about the generally low quality of abstracts submitted to conferences. In particular, their vagueness and consequent uninterestingness. Three academics pointed out to me that there's an obvious reason.

Brian Romans (Virginia Tech) —

One issue, among many, with conference abstracts is the lead time between abstract submission and presentation (if accepted). AAPG is particularly bad at this and it is, frankly, ridiculous. The conference is >6 months from now! A couple years ago, when it was in Calgary in June, abstracts were due ~9 months prior. This is absurd. It can lead to what you are calling vague abstracts because researchers are attempting to anticipate some of what they will do. People want to present their latest and greatest, and not just recycle the same-old, which leads to some of this anticipatory language.

Chris Jackson (Imperial College London) and Zane Jobe (Colorado School of Mines) both responded on Twitter —

Also, by having abstract deadlines so far in advance of a conference, it's no surprise the abstract is vague.

— Christopher Jackson (@seis_matters) October 31, 2017

I agree that many abstracts need more meat, but big problem is that submitting an abstract for a conference 8 months away requires "vague" https://t.co/IqFU3MXYaf

— off the shelf edge (@ZaneJobe) November 1, 2017

What's the problem?

As I explained last time, most abstracts aren't fun to read. And people seem to be saying that this overlong lead time is to blame. I think they're probably right. So much of my advice was useless: you can't be precise about non-existent science.

In this light, another problem occurs to me. Writing abstracts months in advance could also fuel confirmation bias, because we encourage people to set out their hypothetical stalls before they've done the work. (I know people tend to do this anyway, but let's not throw more flammable material at it.)

So now I'm worried that we don't just have boring abstracts, we may be doing bad science too.

Why is it this way?

I think the scholarly societies' official line might be, "Propose talks on completed work." But let's face it, that's not going to happen, and thank goodness because it would lead to even more boring conferences. Like PowerPoint-only presentations, committees powered by Robert's Rules, and terrible coffee, year-old research is no longer good enough.

What can we do about it?

If we can't trust abstracts, how can we select who gets to present at a conference? I can't think of a way that doesn't either introduce all sorts of bias or other unfairness, or invite gaming.

So maybe the problem isn't abstracts, it's talks.

Maybe we don't need to select anything. We just need to let the research community take over the process of telling people about their work, in whatever way they want.

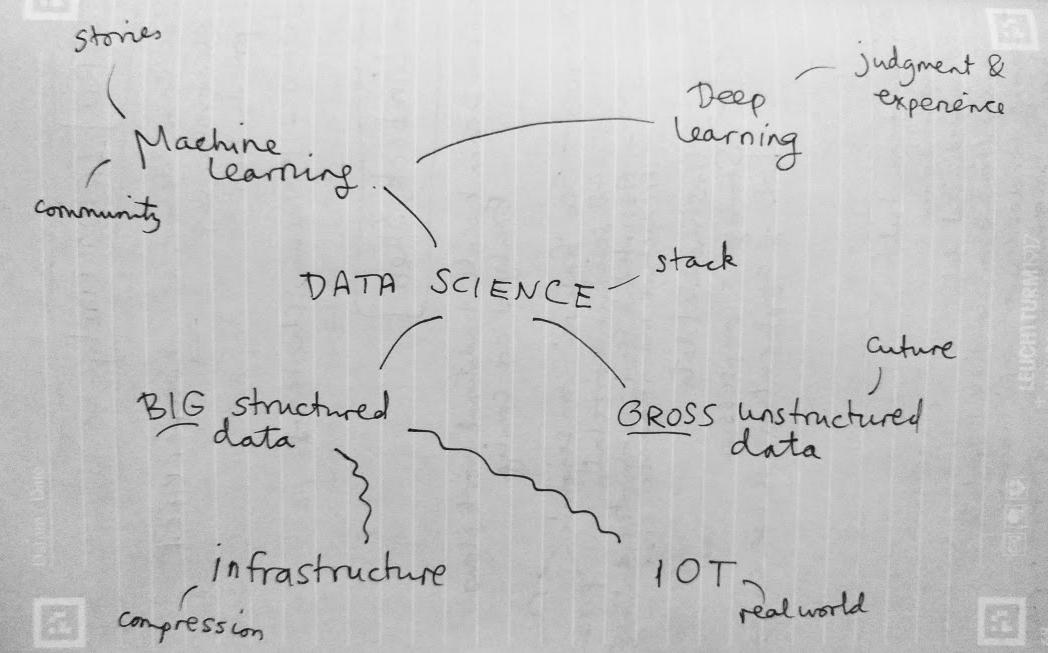

In this alternate reality, the role of the technical society is not to maintain a bunch of clunky processes to 'manage' (but not manage) the community. Instead, its role is to create the conditions for members of the community to dynamically share and progress their work. Researchers don't need 6 months' lead time, or giant spreadsheets full of abstracts, or broken websites (yes, I'm looking at you, Scholar One). They need an awesome space, whiteboards, Wi-Fi, AV equipment, and good coffee.

In short, maybe this is one of the nudges we need to start talking seriously about unconferences.

Except where noted, this content is licensed