The best hackathon in the world

Hackathons are short bursts of creative energy, making things that may or may not turn out to be useful. In general, people work in small teams on new projects with no prior planning. The goal is to find a great idea, then manifest that idea as something that (barely) works, but might not do very much, then show it to other people.

Hackathons are intellectually and professionally invigorating. In my opinion, there's no better team-building, networking, or learning event.

The next event will be 10 & 11 June 2017, right before the EAGE Conference & Exhibition in Paris. I hope you can come.

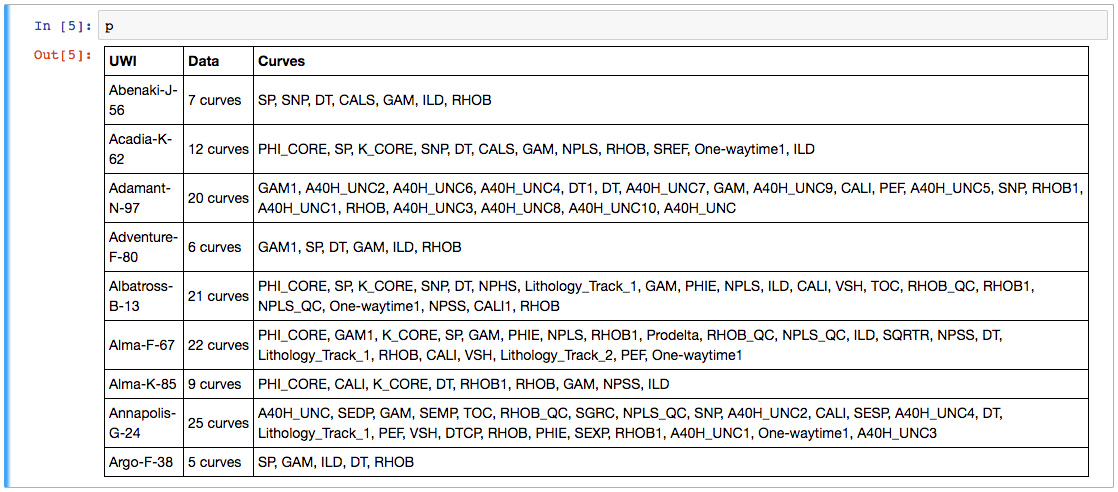

The theme for this event will be machine learning. We had the same theme in New Orleans in 2015, but suffered a bit from a lack of data. This time we will have a collection of open datasets for participants to build off, and we'll prime hackers with a data-and-skills bootcamp on Friday 9 June. We did this once before in Calgary – it was a lot of fun.

Can you help?

It's my goal to get 52 participants to this edition of the event. But I'll need your help to get there. Please share this post with any friends or colleagues you think might be up for a weekend of messing about with geoscience data and ideas.

Besides participants, the thing we always need is sponsors. So far we have three organizations sponsoring the event. Dell EMC is stepping up once again, thanks to the unstoppable David Holmes and his team. And we welcome Sandstone: thank you to Graham Ganssle, my Undersampled Radio co-host, whom I did not coerce in any way.

If your organization might be awesome enough to help make amazing things happen in our community, I'd love to hear from you. There's info for sponsors here.

If you're still unsure what a hackathon is, or what's so great about them, check out my November article in the Recorder (Hall 2015, CSEG Recorder, vol 40, no 9).

Except where noted, this content is licensed
