Survival of the fittest or overcrowding?

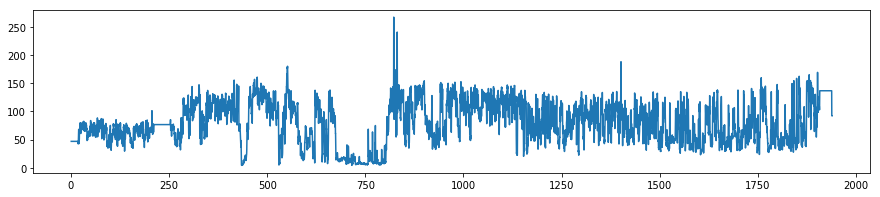

If you’ve been involved in drilling boreholes in your career, you’ll be familiar with desurvey. Desurvey is the process of converting a directional well survey, which provides measured depth, inclination and azimuth points from the borehole, into a position log. The position log is an arbitrarily finely sampled set of (x, y, z) points along the wellbore. We need it to convert from measured depth (distance along the borehole path) to depth in the earth, which is usually given relative to mean sea level.
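A widely used way to do the conversion is the minimum curvature method, which treats each interval between survey stations as a circular arc. Here is a minimal sketch in Python with NumPy. The function name `min_curve_desurvey` is hypothetical and is not the API of any package listed below. The sketch assumes:

- inclination and azimuth are in degrees;
- azimuth is measured clockwise from north;
- z is positive downward from the first station.

```python
import numpy as np


def min_curve_desurvey(md, inc, azi, start=(0.0, 0.0, 0.0)):
    """Minimum curvature desurvey (hypothetical helper, not a package API).

    md       : measured depths of the survey stations
    inc, azi : inclination and azimuth in degrees (azimuth clockwise from north)
    start    : (east, north, tvd) of the first station
    Returns an (n, 3) array of (east, north, tvd); tvd is positive down.
    """
    md = np.asarray(md, dtype=float)
    inc = np.radians(np.asarray(inc, dtype=float))
    azi = np.radians(np.asarray(azi, dtype=float))

    # Unit tangent vector of the borehole at each station: (east, north, down).
    t = np.column_stack([
        np.sin(inc) * np.sin(azi),
        np.sin(inc) * np.cos(azi),
        np.cos(inc),
    ])

    # Dogleg: the angle between the tangents at successive stations.
    cos_dl = np.clip(np.sum(t[:-1] * t[1:], axis=1), -1.0, 1.0)
    dogleg = np.arccos(cos_dl)

    # Ratio factor 2/DL * tan(DL/2); it tends to 1 for a straight interval.
    rf = np.ones_like(dogleg)
    bent = dogleg > 1e-12
    rf[bent] = 2.0 / dogleg[bent] * np.tan(dogleg[bent] / 2.0)

    # Displacement over each interval, then accumulate from the first station.
    dmd = np.diff(md)
    steps = (dmd * rf / 2.0)[:, None] * (t[:-1] + t[1:])
    offsets = np.vstack([np.zeros((1, 3)), np.cumsum(steps, axis=0)])
    return offsets + np.asarray(start, dtype=float)


# A quarter-circle build from vertical to horizontal, heading due north.
R = 500.0
print(min_curve_desurvey([0.0, np.pi * R / 2], [0.0, 90.0], [0.0, 0.0]))
# last row is approximately (0, 500, 500): exact for a circular arc
```

The z values are true vertical depths below the first station. To reference them to mean sea level, subtract the elevation of the depth datum (for example the kelly bushing) above sea level.

Resampling onto a fine measured-depth grid to make a position log is a separate step. Linear interpolation between stations with `np.interp` is the simplest option, but it does not follow the arcs exactly.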

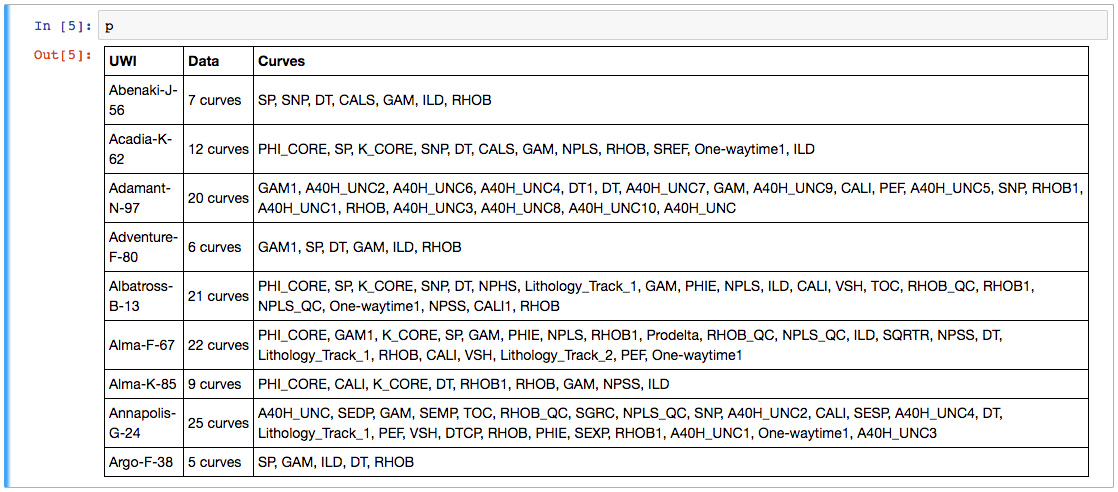

I hunted around recently and found no fewer than nine Python packages that contain desurvey code. They are of various vintages and licences:

welly — Agile, 2016, Apache 2 licence

wellpathpy — Rob Leckenby and Jørgen Kvalsvik, 2019, LGPL licence

dh2loop — Loop3D project, 2020, MIT licence

welltrajconvert — Brandon Amos, 2020, MIT licence

reservoirpy — Santiago Cuervo, 2020, MIT licence

well_profile — Pro Well Plan, 2020, LGPL licence

welleng — Jonny Maserati, 2020, Apache 2 licence

Other, larger tools also contain desurvey code, though it is less reusable:

pygslib, a wrapper for a geostatistics package — 2015, MIT licence

geoscience, a general geology Qt application — 2019, GPL licence

This is amazing: look at all the activity in the last two years or so! I think this is a strong sign that we've hit a new era in geocomputing. Fifteen years ago, a big chunk of the open-source geoscience stuff out there was the ten or so seismic processing libraries. Then, about seven or eight years ago, there was a proliferation of geophysical modeling and inversion tools. Now perhaps we're seeing the geological and wells communities entering the same stage of evolution. This is encouraging.

What now?

But there’s a problem here too: this is a small community, with limited resources. Can we afford this diversity? I accept that there might be a sort of Cambrian explosion that has to happen with new technology. But how can we ensure that it results in an advantageous “survival of the fittest” ecology, and avoid it becoming just another way for the community to spread itself too thinly? To put it another way, how can we accelerate the evolutionary process, so that we don’t drain the ecosystem of valuable resources?

There’s also the problem of how to know which of these tools is the fittest. As far as I’m aware, there are no well trajectory benchmarks to compare against. Some of the libraries I listed above do not have rigorous tests, and they certainly all have bugs (it’s software). How can we ensure that quality emerges from this amazing pool of technology?
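One possible ingredient of such a benchmark needs no reference data at all: geometry with a known exact answer. Consider a well that starts vertical and builds at a constant rate in one azimuth. It follows a circular arc of radius R. At measured depth s from the kickoff, its inclination is s/R, its horizontal displacement is R(1 - cos(s/R)), and its vertical depth is R sin(s/R).

The sketch below scores the simple tangential method against that arc. In the tangential method, each interval is a straight line at the lower station's inclination. Everything here is an illustrative assumption: the function names, the 2D (single-azimuth) simplification, and the 573 m radius, which is roughly a 3°/30 m build rate.

```python
import numpy as np


def build_section_truth(md, radius):
    """Exact (horizontal, tvd) along an arc that starts vertical and builds
    at a constant rate in a single azimuth. Hypothetical benchmark case."""
    theta = np.asarray(md, dtype=float) / radius  # inclination in radians
    return radius * (1.0 - np.cos(theta)), radius * np.sin(theta)


def tangential_desurvey(md, inc_deg):
    """Tangential method in 2D: each interval is treated as a straight line
    at the inclination of its lower station. Shown only as a test subject."""
    md = np.asarray(md, dtype=float)
    inc = np.radians(np.asarray(inc_deg, dtype=float))
    dmd = np.diff(md)
    horiz = np.concatenate([[0.0], np.cumsum(dmd * np.sin(inc[1:]))])
    tvd = np.concatenate([[0.0], np.cumsum(dmd * np.cos(inc[1:]))])
    return horiz, tvd


def max_error(desurvey, n_stations, radius=573.0):
    """Largest position error (same units as radius) of a 2D desurvey
    function against the analytic build section, from 0 to 90 degrees."""
    md = np.linspace(0.0, np.pi * radius / 2.0, n_stations)
    inc_deg = np.degrees(md / radius)
    h_true, z_true = build_section_truth(md, radius)
    h, z = desurvey(md, inc_deg)
    return float(np.max(np.hypot(h - h_true, z - z_true)))


for n in (31, 301):
    print(n, "stations:", round(max_error(tangential_desurvey, n), 2), "m")
```

For the tangential method, the error shrinks roughly in proportion to the station spacing but never vanishes. Minimum curvature models each interval as a circular arc, so it should reproduce this case to within rounding error. A shared suite of cases like this could catch both weak methods and plain bugs.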

I don’t know, but I think this is a soluble problem. We’ve done the hard part! Nine times :D

There was another sign of subsurface technology maturity at the TRANSFORM conference this year. Several of the tutorials and hackathon projects focused on integrating tools like GemPy, Devito, segyio, and the lasio/welly/striplog family. This is exciting to see: 2021 could be an important year in the history of the open subsurface Python stack. Stay tuned!

Except where noted, this content is licensed
