Introducing Bruges

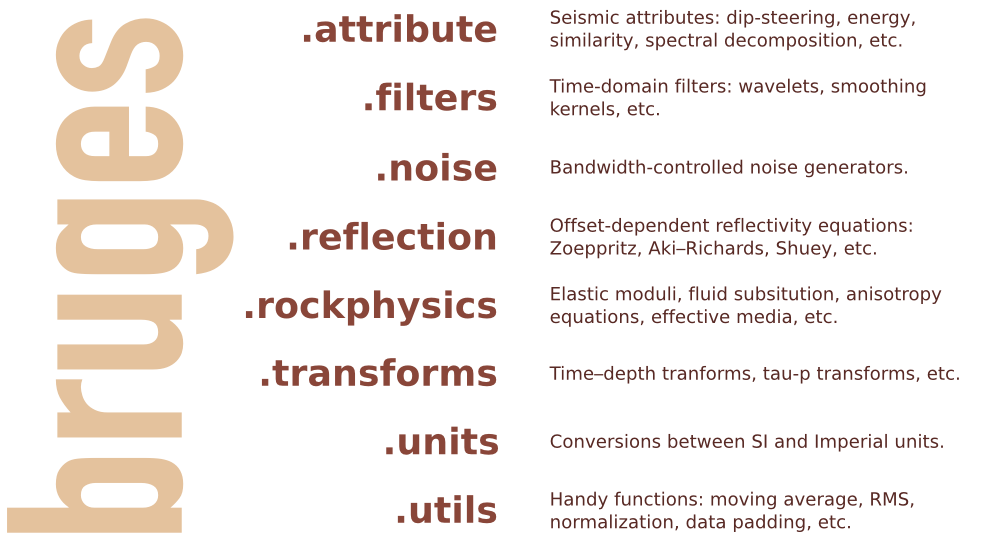

/Welcome to Bruges, a Python library (previously known as agilegeo) that contains a variety of geophysical equations used in processing, modeling and analysing seismic reflection and well log data. Here's what's in the box so far, with new stuff being added every week:

Simple AVO example

| VP [m/s] | VS [m/s] | ρ [kg/m3] | |

|---|---|---|---|

| Rock 1 | 3300 | 1500 | 2400 |

| Rock 2 | 3050 | 1400 | 2075 |

Imagine we're studying the interface between the two layers whose rock properties are shown here...

To compute the zero-offset reflection coefficient at zero offset, we pass our rock properties into the Aki-Richards equation and set the incident angle to zero:

>>> import bruges as b >>> b.reflection.akirichards(vp1, vs1, rho1, vp2, vs2, rho2, theta1=0) -0.111995777064

Similarly, compute the reflection coefficient at 30 degrees:

>>> b.reflection.akirichards(vp1, vs1, rho1, vp2, vs2, rho2, theta1=30) -0.0965206980095

To calculate the reflection coefficients for a series of angles, we can pass in a list:

>>> b.reflection.akirichards(vp1, vs1, rho1, vp2, vs2, rho2, theta1=[0,10,20,30]) [-0.11199578 -0.10982911 -0.10398651 -0.0965207 ]

Similarly, we could compute all the reflection coefficients for all incidence angles from 0 to 70 degrees, in one degree increments, by passing in a range:

>>> b.reflection.akirichards(vp1, vs1, rho1, vp2, vs2, rho2, theta1=range(70)) [-0.11199578 -0.11197358 -0.11190703 ... -0.16646998 -0.17619878 -0.18696428]

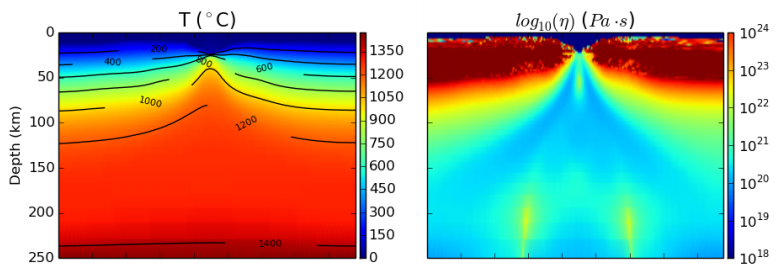

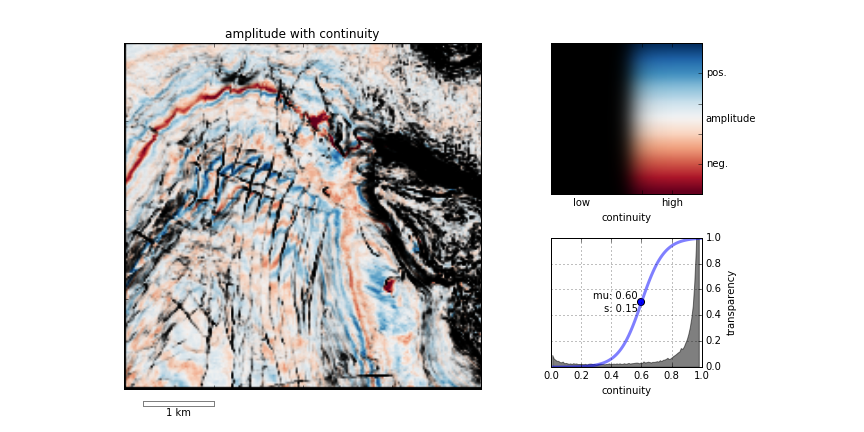

A few more lines of code, shown in the Jupyter notebook, and we can make some plots:

Elastic moduli calculations

With the same set of rocks in the table above we could quickly calculate the Lamé parameters λ and µ, say for the first rock, like so (in SI units),

>>> b.rockphysics.lam(vp1, vs1, rho1), b.rockphysics.mu(vp1, vs1, rho1) 15336000000.0 5400000000.0

Sure, the equations for λ and µ in terms of P-wave velocity, S-wave velocity, and density are pretty straightforward:

but there are many other elastic moduli formulations that aren't. Bruges knows all of them, even the weird ones in terms of E and λ.

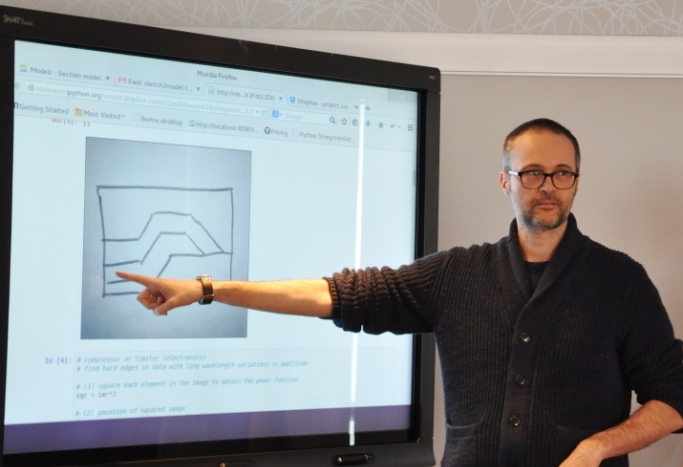

All of these examples, and lots of others — Backus averaging, examples are available in this Jupyter notebook, if you'd like to work through them on your own.

Bruges is a...

It is very much early days for Bruges, but the goal is to expose all the geophysical equations that geophysicists like us depend on in their daily work. If you can't find what you're looking for, tell us what's missing, and together, we'll make it grow.

What's a handy geophysical equation that you employ in your work? Let us know in the comments!

Except where noted, this content is licensed

Except where noted, this content is licensed