More ways to make models

A few weeks ago I wrote about a new feature in bruges for making wedge models. It makes them really easy to build, for example:

    import bruges as bg
    import matplotlib.pyplot as plt

    strat = [(0, 1, 0), (2, 3, 2, 3, 2), (4, 5, 4)]
    wedge, *_ = bg.models.wedge(strat=strat, conformance='top')

    plt.imshow(wedge)

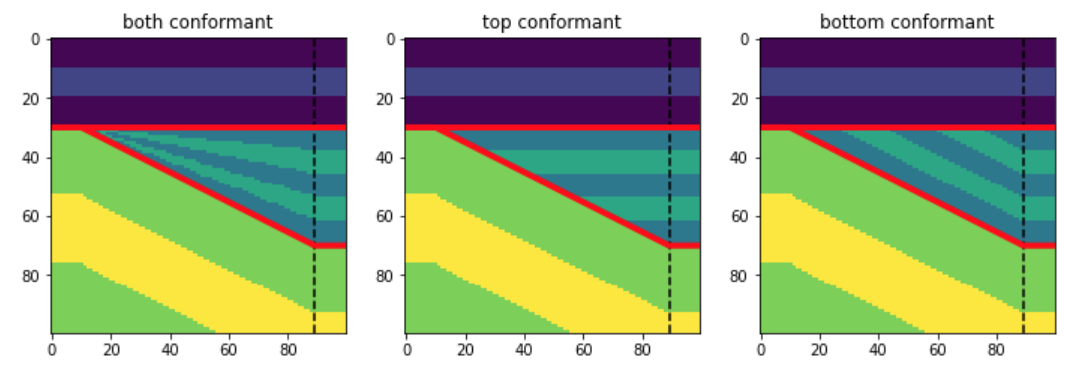

And here are some examples of what this will produce, depending on the conformance argument:

What’s new

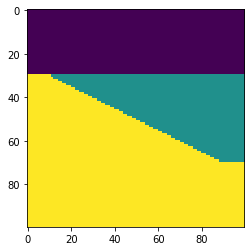

I thought it might be interesting to add another dimension to the wedge model: in and out of the screen in the models shown above. In that new dimension, as long as there are only two rock types in the wedge, we could vary its “net:gross”.

So if we have two rock types in the wedge, let’s say 2 and 3 as in the wedges shown above, we’ll end up with a lot of different wedges. At one end of the new dimension, we’ll have a wedge that is all 2. At the other end, it’ll be all 3. And in between, there’ll be a mixture of 2 and 3, with the layers interfingering in a geometrically symmetric way.

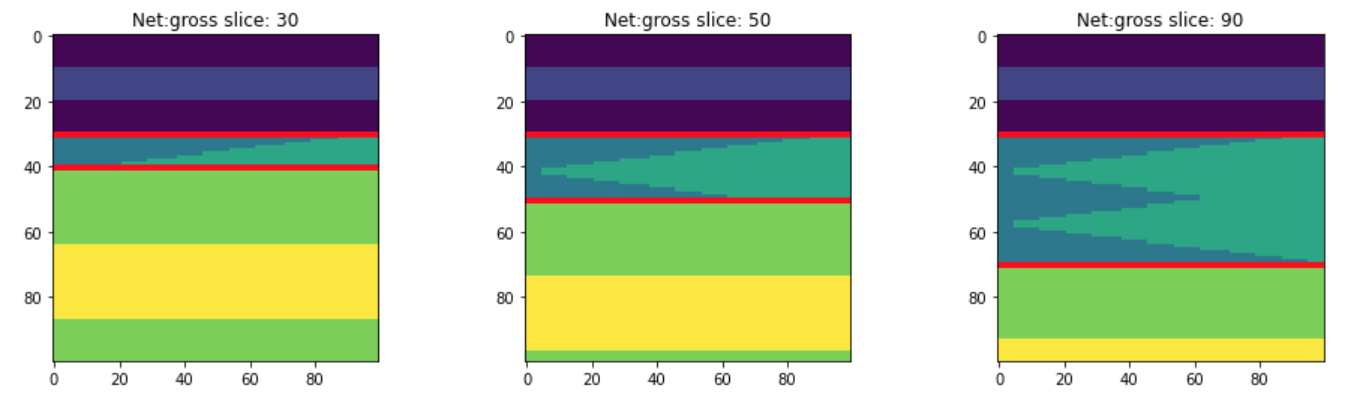

Let’s look at those 3 slices in the central model shown above:

We can also take slices in that other dimension, to visualize how net:gross varies across the model. These slices are near the thin end of the wedge, the middle, and the thick end:

To get 3D wedge models like this, just make a binary (2-component) wedge and add breadth=100 (the number of slices in the new dimension).

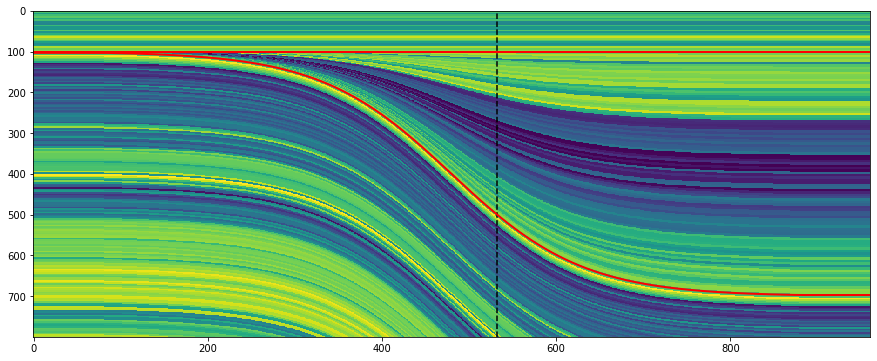

These models are admittedly a little mind-bending. Here’s what slices in all 3 orthogonal directions look like, along with (very badly) simulated seismic through this 3D wedge model:

New wedge shapes!

As well as the net-to-gross thing, I added some new wedge shapes, so now you can have some steep-sided alternatives to the linear and sigmoidal ramps I had in there originally. In fact, you can pass in a wedge shape function of your own, so there’s no end to what you could implement.
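To give a flavour of what a home-made shape function could look like, here is a small sketch in plain NumPy. The name power_ramp, its exponent argument, and the np.interp-style (x, xp, fp) signature are all my assumptions for illustration; the notebook is the place to check exactly what bruges expects.

```python
import numpy as np

def power_ramp(x, xp, fp, exponent=2.0):
    """
    Hypothetical wedge shape: go from fp[0] to fp[1] between xp[0] and
    xp[1] along a power-law curve instead of a straight line.
    """
    x = np.asarray(x, dtype=float)
    # Normalized position along the ramp, clipped to the range 0 to 1.
    t = np.clip((x - xp[0]) / (xp[1] - xp[0]), 0.0, 1.0)
    return fp[0] + (fp[1] - fp[0]) * t**exponent

# Relative thickness across 80 traces, from zero to full thickness.
x = np.arange(80)
thickness = power_ramp(x, xp=(0, 79), fp=(0.0, 1.0))
```

With an exponent above 1 the wedge stays thin for longer and then thickens quickly, which gives a steeper side than the linear ramp. An exponent below 1 does the opposite.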

You can read about these new features in this notebook. Please note that you will need the latest version of bruges to use them, so run pip install --upgrade bruges in your environment and you’ll be all set. Share your models; I’d love to see what you make!

Except where noted, this content is licensed
