Well-tie workflow

We've had a couple of emails recently about well ties. Ever since my days as a Landmark workflow consultant, I've thought the process of calibrating seismic data to well data was one of the rockiest parts of the interpretation workflow, and not just because of SynTool. One might almost call the variety of approaches an unsolved problem.

Tying wells usually involves forward modeling a synthetic seismogram from sonic and density logs, then matching that synthetic to the seismic reflection data, thus producing a relationship between the logs (measured in depth) and the seismic (measured in travel time). Problems arise for all sorts of reasons: the quality of the logs, the quality of the seismic, confusion about handling the shallow section, confusion about integrating checkshots, confusion about wavelets, and the usability of the software. As with much of the rest of interpretation, it takes science and judgment in equal measure.
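To make the forward-modeling step concrete, here is a minimal sketch in Python with NumPy. It is not how any particular package does it. It assumes the velocity and density logs have already been resampled to regular two-way time at `dt` seconds, that a zero-phase Ricker wavelet is a fair stand-in for the seismic wavelet, and that normal-incidence reflectivity is good enough. The function names and the default 25 Hz peak frequency are my own choices for illustration.

```python
import numpy as np

def ricker(f_peak, dt, length=0.128):
    """Zero-phase Ricker wavelet; f_peak in Hz, dt and length in seconds."""
    n = int(round(length / dt)) // 2
    t = np.arange(-n, n + 1) * dt          # symmetric about t = 0
    a = (np.pi * f_peak * t) ** 2
    return (1.0 - 2.0 * a) * np.exp(-a)

def reflectivity(velocity, density):
    """Normal-incidence reflection coefficients at each sample interface."""
    z = np.asarray(velocity, float) * np.asarray(density, float)  # acoustic impedance
    return (z[1:] - z[:-1]) / (z[1:] + z[:-1])

def synthetic(velocity, density, f_peak=25.0, dt=0.002):
    """Convolve reflectivity with a Ricker wavelet.

    Assumes the logs are already sampled in two-way time at dt.
    """
    rc = reflectivity(velocity, density)
    return np.convolve(rc, ricker(f_peak, dt), mode="same")
```

Calling `synthetic(vp, rho)` on two equal-length arrays returns a trace one sample shorter than the logs, because each reflection coefficient sits at the interface between two samples.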

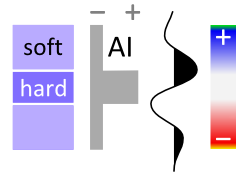

Synthetic seismogram (right) from the reservoir section of the giant bitumen field Surmont, northern Alberta. The reservoir is only about 450 m deep, and about 70 m thick. From Hall (2009), Calgary GeoConvention.

I'd go so far as to say that I think tying wells robustly is one of the unsolved problems of subsurface geoscience. How else can we explain the fact that any reasonably mature exploration project has at least 17 time-depth curves per well, with names like JLS_2002_fstk01_edit_cks_R24Hz_final?

My top tips

First, read up. White & Simm (2003) in First Break 21 (10) is excellent. Rachel Newrick's essays in 52 Things are essential. Next, think about the seismic volume you are trying to tie to. Keep it to the nears if possible (don't use a full-angle stack unless it's all you have). Use a volume with less filtering if you have it (and you should be asking for it). And get your datums straight, especially if you are on land: make certain your seismic datum is correct. Ask people, look at SEGY headers, but don't be satisfied with one data point.

Once that stuff is ironed out:

- Chop any casing velocities or other non-data off the top of your log.

- Edit as gently and objectively as possible. Some of those spikes might be geology.

- Look at the bandwidth of your seismic and make an equivalent zero-phase wavelet.

- Don't extract a wavelet till you have a few good ties with a zero-phase wavelet, then extract from several wells and average. Extracting wavelets is a whole other post...

- Bulk shift the synthetic (e.g. by varying the replacement velocity) to make a good shallow event tie.

- Stretch (or, less commonly, squeeze) the bottom of the log to match the deepest event you can.

- If possible, don't add any more tie points unless you really can't help yourself. Definitely no more than 5 tie points per well, and no closer than a couple of hundred milliseconds.

- Capture all the relevant data for every well as you go (screenshot, replacement velocity, cross-correlation coefficient, residual phase, apparent frequency content).

- Be careful with deviated wells; you might want to avoid tying the deviated section entirely and use verticals instead. If you go ahead, read your software's manual. Twice.

- Do not trust any checkshot data you find in your project — always go back to the original survey (they are almost always loaded incorrectly, mainly because the datums are really confusing).

- Get help before trying to load or interpret a VSP unless you really know what you are doing.
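The bulk shift and stretch steps in the list above can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not a recipe from any software: the sonic log is taken to be slowness in s/m, depths are metres below the seismic datum, the replacement velocity fills the gap between the datum and the top of the log, and the stretch is a simple linear scaling that holds the top of the log fixed. All three function names are hypothetical.

```python
import numpy as np

def start_time(log_top_below_datum, v_replacement):
    """Two-way time (s) from datum to log top, using a replacement velocity (m/s)."""
    return 2.0 * log_top_below_datum / v_replacement

def time_depth(depth, slowness, log_start_twt):
    """Two-way time (s) at each depth by integrating sonic slowness (s/m)."""
    depth = np.asarray(depth, float)
    slowness = np.asarray(slowness, float)
    # Trapezoidal integration gives one-way time below the top of the log.
    steps = 0.5 * (slowness[1:] + slowness[:-1]) * np.diff(depth)
    owt = np.concatenate([[0.0], np.cumsum(steps)])
    return log_start_twt + 2.0 * owt

def stretch_to_tie(twt, idx, twt_pick):
    """Linearly stretch or squeeze so sample idx lands on twt_pick.

    Assumption: the first sample (the shallow tie) stays where it is.
    """
    twt = np.asarray(twt, float)
    scale = (twt_pick - twt[0]) / (twt[idx] - twt[0])
    return twt[0] + (twt - twt[0]) * scale
```

Changing `v_replacement` moves the whole curve up or down in time, which is the bulk shift. `stretch_to_tie` then pins the deepest event you trust. Whatever values you settle on, write them down alongside the screenshot.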

I could add some don'ts too...

- Don't tie wells to 2D seismic lines you have not balanced yet, unless you're doing it as part of the process of deciding how to balance the seismic.

- Don't create multiple, undocumented, obscurely named copies or almost-copies of well logs and synthetics, unless you want your seismic interpretation project to look like every seismic interpretation project I've ever seen (you don't).

Well ties are one of those things that get in the way of 'real' (i.e. fun) interpretation so they sometimes get brushed aside, left till later, rushed, or otherwise glossed over. Resist at all costs. If you mess them up and don't find out till later, you will be very sad, but not as sad as your exploration manager.

Update

on 2013-04-27 13:25 by Matt Hall

Can't resist posting this most excellent well tie. Possibly the best you'll ever see.

Picture by Maitri, licensed CC-BY-NC-SA

Update

on 2014-07-04 13:53 by Matt Hall

Evan has written a deconstructed well-tie workflow, complete with IPython Notebook for you to follow along with, for The Leading Edge. Read Well-tie calculus here.

Except where noted, this content is licensed
