Finding Big Bertha with a hot wire

Sunday will be the 131st birthday of General Andrew McNaughton, a Canadian electrical engineer who served in the Canadian Expeditionary Force in the First World War. He was eventually appointed commander of the Canadian Corps Heavy Artillery and went on to serve in the Second World War as well.

So what is a professional soldier doing on a blog about geoscience? Well, McNaughton was part of the revolution of applied acoustics and geophysics that emerged right before and after the First World War.

Along with eminent British physicist Lawrence Bragg, engineer William Sansome Tucker, and physicist Charles Galton Darwin (the other Charles Darwin's grandson), among others, McNaughton applied physics to the big problem of finding the big noisy things that were trying to blow everyone up. They were involved in an arms race of their own — German surveyor Ludger Mintrop was trying to achieve the same goal from the other side of the trenches.

After gaining experience as a gunner, McNaughton became one of a handful of scientists and engineers involved in counter-battery operations. Using novel ranging techniques, these scientists gave the Allied forces a substantial advantage over the enemy. Counter-battery fire became an important weapon at pivotal battles like Vimy Ridge, and certainly helped expedite the end of the war.

If all this sounds like a marginal way to win a battle, stop and think for a second about this artillery. The German howitzer known as 'Big Bertha' (left) could toss an 820 kg (1800 lb) shell about 12.5 km (7.8 miles). In other words, it was incredibly annoying.

Combining technologies

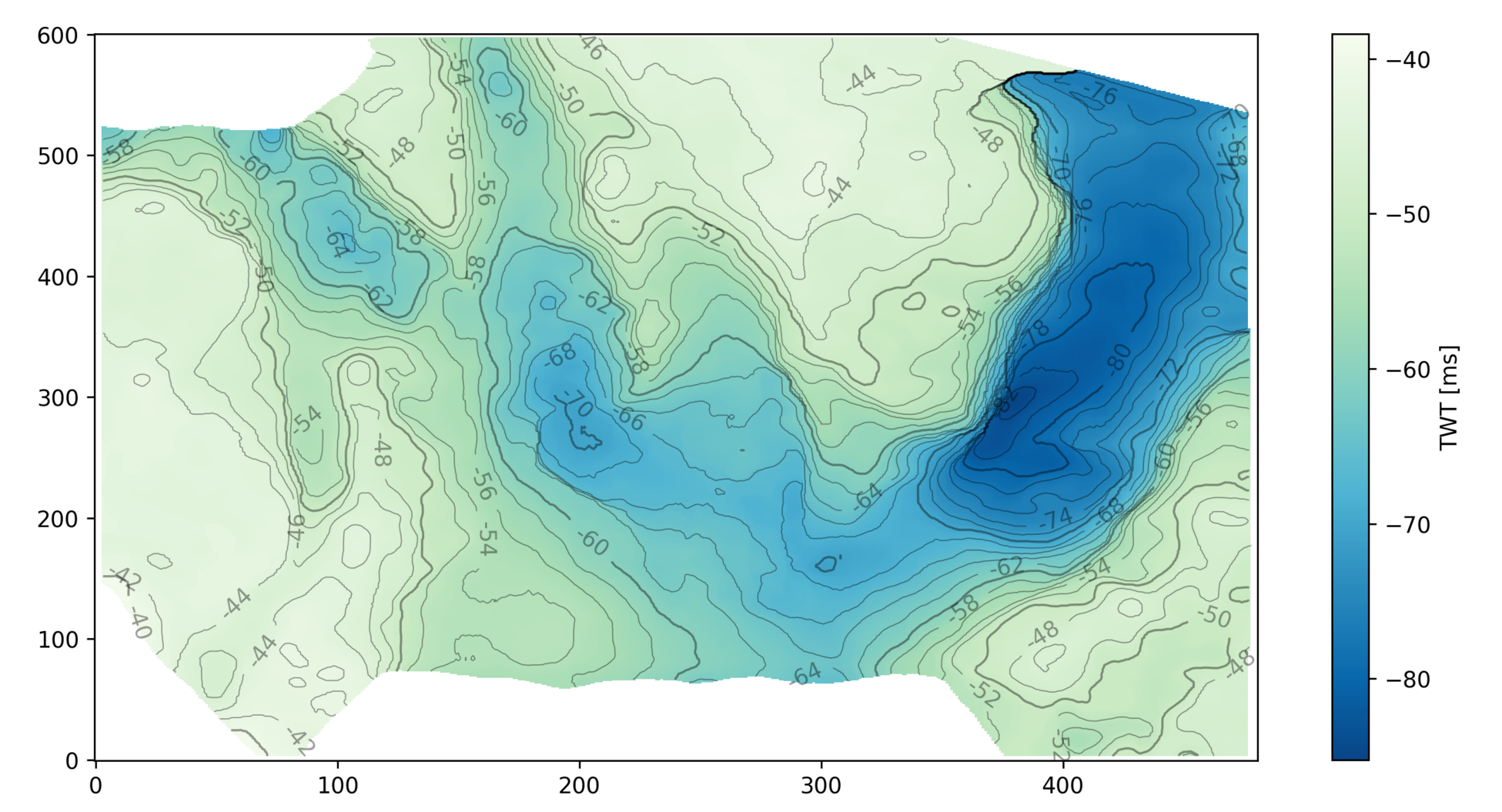

Localization accuracy on the order of 5–10 m was eventually achieved for the large majority of gun positions through the coordinated use of several technologies, including espionage, cartography, aerial reconnaissance photography, and the new counter-measures of flash spotting and sound ranging.

Flash spotting was more or less just what it sounds like: teams of spotters recording the azimuth of artillery flashes, then triangulating artillery positions from multiple observations. The only real trick was in reporting the timing of flashes to help establish that the flashes came from the same gun.
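The triangulation step can be sketched in a few lines of Python. This is only an illustration, not a reconstruction of the plotting-board methods actually used in the field. It assumes flat grid coordinates in metres, azimuths measured clockwise from grid north, and a least-squares fit for the point closest to all the sight lines. The function name `triangulate` and the observation-post coordinates are made up for the example.

```python
import math


def triangulate(observations):
    """Least-squares intersection of sight lines from several observation posts.

    observations: list of (east_m, north_m, azimuth_deg) tuples, with the
    azimuth measured clockwise from grid north (an assumed convention).
    Returns the estimated (east_m, north_m) of the flash.

    Note: each sight line is treated as an infinite line, so a solution
    "behind" an observer is not rejected.
    """
    # Build the 2x2 normal equations: (sum n n^T) p = sum n (n . o),
    # where n is the unit normal to each sight line and o the post position.
    sxx = sxy = syy = bx = by = 0.0
    for east, north, az_deg in observations:
        az = math.radians(az_deg)
        # The sight line points along (sin az, cos az); its normal is:
        nx, ny = math.cos(az), -math.sin(az)
        c = nx * east + ny * north
        sxx += nx * nx
        sxy += nx * ny
        syy += ny * ny
        bx += nx * c
        by += ny * c

    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("sight lines are parallel; no unique intersection")

    # Solve the 2x2 system by Cramer's rule.
    x = (syy * bx - sxy * by) / det
    y = (sxx * by - sxy * bx) / det
    return x, y


# Hypothetical observation posts (east, north in metres) and azimuths (degrees).
posts = [(0.0, 0.0, 36.87), (2000.0, 0.0, 14.04), (5000.0, 500.0, 330.26)]
print(triangulate(posts))
```

With these made-up numbers the estimate should land within a few metres of (3000, 4000). With real observations the sight lines never meet at a single point, which is why a least-squares fit is used here rather than intersecting just two lines.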

Sound ranging, on the other hand, is a tad more complicated. It seems that Lawrence Bragg was the first to realize that the low frequency sound of artillery fire — which he said lifted him off the privy seat in the outhouse at his lodgings — might be a useful signal. However, microphones were not up to the task of detecting such low frequencies. Furthermore, the signal was masked by the (audible) sonic boom of the shell, as well as the shockwaves of passing shells.

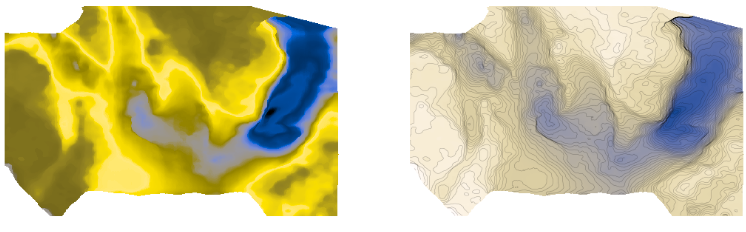

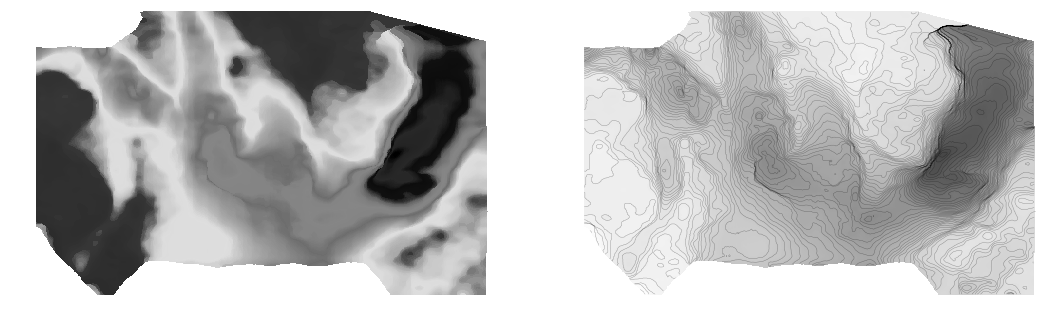

Elsewhere in Belgium, William Tucker had another revelation. Lying inside a shack with holes in its walls, he realized that the 20 Hz pressure wave from the gun created tiny puffs of air through the holes. So he looked for a way to detect this pulse, and came up with a heated platinum wire in a rum jar. The filament's resistance dropped when cooled by the wavefront's arrival through an aperture. The wire was unaffected by the high-frequency shell wave. Later, moving-coil 'microphones' (geophones, essentially) were also used, as well as calibration for wind and temperature. The receivers were coupled with a 5-channel string galvanometer, invented by French engineers, to record traces onto 35-mm film bearing timing marks.
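The timing marks matter because sound ranging works from differences in arrival time: the same gun report reaches each microphone at a slightly different moment. As a rough sketch of the geometry, the Python below turns the time difference at one pair of microphones into a bearing. It assumes the gun is far away compared with the microphone spacing (the far-field approximation, where the hyperbola of possible positions is replaced by its asymptote) and a constant speed of sound of 340 m/s with no wind or temperature correction. The function name `bearing_from_pair` is hypothetical.

```python
import math

SPEED_OF_SOUND = 340.0  # m/s; an assumed value, it varies with temperature and wind


def bearing_from_pair(dt, baseline, c=SPEED_OF_SOUND):
    """Far-field bearing to a gun from one microphone pair, A and B.

    dt       : arrival time at A minus arrival time at B, in seconds
               (positive when B hears the gun first)
    baseline : distance between A and B, in metres
    Returns the angle in degrees between the perpendicular bisector of AB
    and the direction to the gun, positive towards B.
    """
    # Path-length difference as a fraction of the baseline.
    ratio = c * dt / baseline
    if abs(ratio) > 1.0:
        # The path difference can never exceed the baseline itself.
        raise ValueError("time difference too large for this baseline")
    return math.degrees(math.asin(ratio))


# Example: microphones 1000 m apart, B hears the report 0.5 s before A.
print(bearing_from_pair(0.5, 1000.0))
```

In this example the gun lies roughly 9.8 degrees off the perpendicular bisector, towards microphone B. Each pair of microphones gives one such bearing, and bearings from several pairs can be intersected in the same way as flash-spotting azimuths.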

McNaughton continued to develop these technologies through the war, and by the end was successfully locating the large majority of enemy artillery, and was even able to specify the calibre of the guns and their probable intended targets. Erster Generalquartiermeister Erich Ludendorff commented at one point in the war:

“According to a captured English document the English have a well-developed system of sound-ranging which in theory corresponds to our own. Precautions are accordingly to be taken to camouflage the sound: e.g. registration when the wind is contrary, and when there is considerable artillery activity, many batteries firing at the same time, simultaneous firing from false positions, etc.”

An acoustic arsenal

The hot-wire artillery detector was not Tucker's only acoustic innovation. He also pioneered the use of acoustic mirrors for aircraft detection. Several of these were built around the UK's east coast, starting around 1915; the three shown here are at Denge in Kent. They were rendered obsolete by the invention of radar around the beginning of World War Two.

Acoustic and seismic devices are still used today in military and security applications, though they are rarely mentioned in applied geophysics textbooks. If you know about any interesting contemporary uses, tell us about them in the comments.

According to Crown Copyright terms, the image of McNaughton is out of copyright. The acoustic mirror image is by Paul Russon, licensed CC-BY-SA. The uncredited/unlicensed galvanometer trace is from the excellent Stop, hey, what's that sound article on the geographical imaginations blog; I assume it is out of copyright. The howitzer image is out of copyright.

This post on Target acquisition and counter battery is very informative and has lots of technical details, though most of it pertains to later technology. The Boom! Sounding out the enemy article on ScienceNews for Students is also very nice, with lots of images.

Except where noted, this content is licensed
