Canadian codeshow

Earlier this month we brought the world-famous geoscience hackathon to Calgary, tacking on a geocomputing bootcamp for good measure. Fourteen creative geoscientists came and honed their skills, leaving four varied projects in their wake. So varied, in fact, that this event had the most diversity of any of the hackathons so far.

Thank you to Raquel Theodoro and Penny Colton for all the great photographs. You both did a great job of capturing what went on. Cheers!

Thank you as well to our generous and generally awesome sponsors. These events would not be possible without them.

Bootcamp

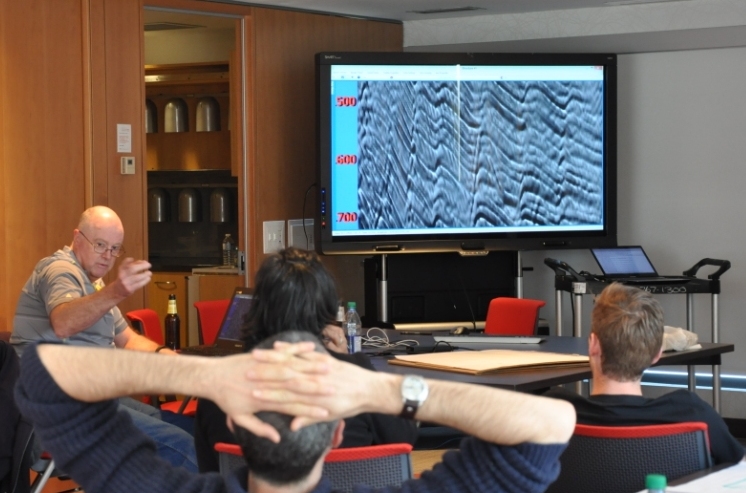

The bootcamp was a big experiment. We have taught beginner classes before, but this time we also invited beyond-novice programmers to come and learn together. Rather than making it a classroom experience, we were trying to make a friendly space where people could learn from us, from each other, or from books or the web. After some group discussion about hackathons and dream projects (captured here), we split into two groups: beginners and 'other'. The beginners got an introduction to scientific Python; the others got a web application masterclass from Ben Bougher (UBC master's student and Agile code ninja). During the day, we harvested a pretty awesome list of potential future hackathon projects.

Hackathon

The hackathon itself yielded four very cool projects, fuelled this time not by tacos but by bánh mì and pizza (separately):

- Hacking data inside Seismic Terrain Explorer, by Steve Lynch of Calgary

- Launching GLauncher, a crowdfunding tool, by Raquel Theodoro of Rio de Janeiro and Ben Bougher of UBC

- Hacksaw: A quick-look for LAS files in a web app, by Gord Foo, Gerry Cao, and Yongxin Liu of Calgary, plus me

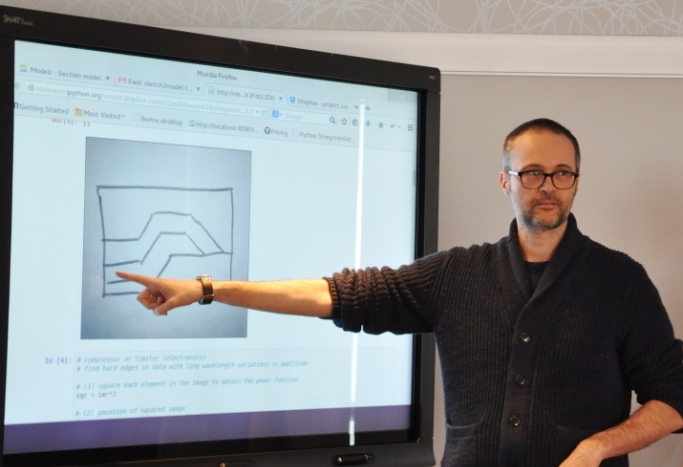

- Turning sketches into models, by Evan Saltman, Elwyn Galloway, and Matteo Niccoli of Calgary, and Ben again

Sketch2model was remarkable for a few reasons: it was the first hackathon for most of the team, they had not worked together before, Elwyn dreamt up the idea more or less on the spot, and they seemed to nail it with a minimum of fuss. Matteo quietly got on with the image processing magic, Evan and Ben modified modelr.io to do the modeling bit, and Elwyn orchestrated the project, providing a large number of example sketches to keep the others from getting too cocky.

We'll be doing it all again in New Orleans this fall. Get it in your calendar now!

Except where noted, this content is licensed
