Looking forward to Copenhagen

We're in Copenhagen for the Subsurface Bootcamp and Hackathon, which start today, and the EAGE Annual Conference and Exhibition, which starts next week. Walking around the city yesterday, basking in warm sunshine and surrounded by sun-giddy Scandinavians, it became clear that Copenhagen is a pretty special place, where northern Europe and southern Europe seem to have equal influence.

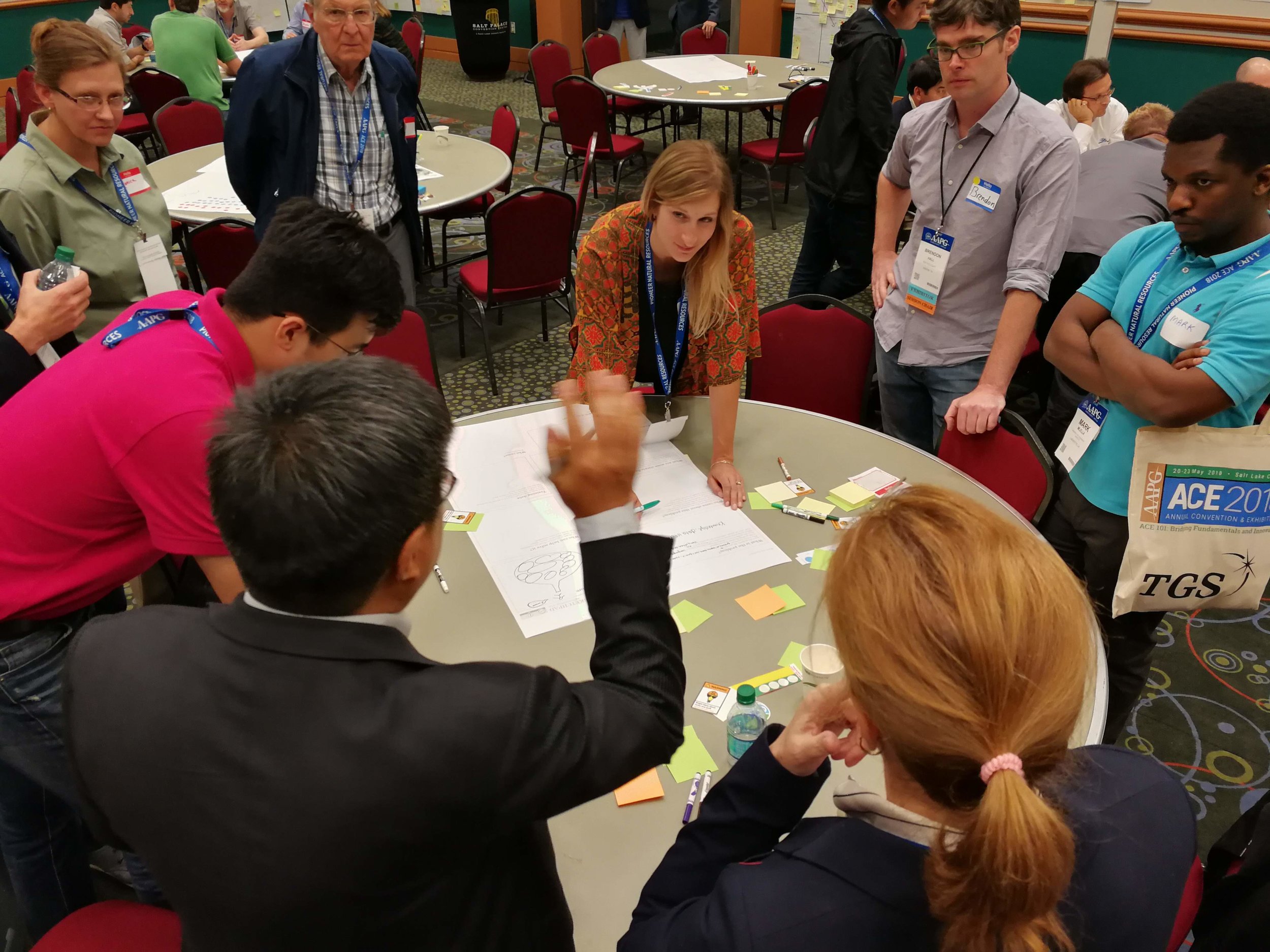

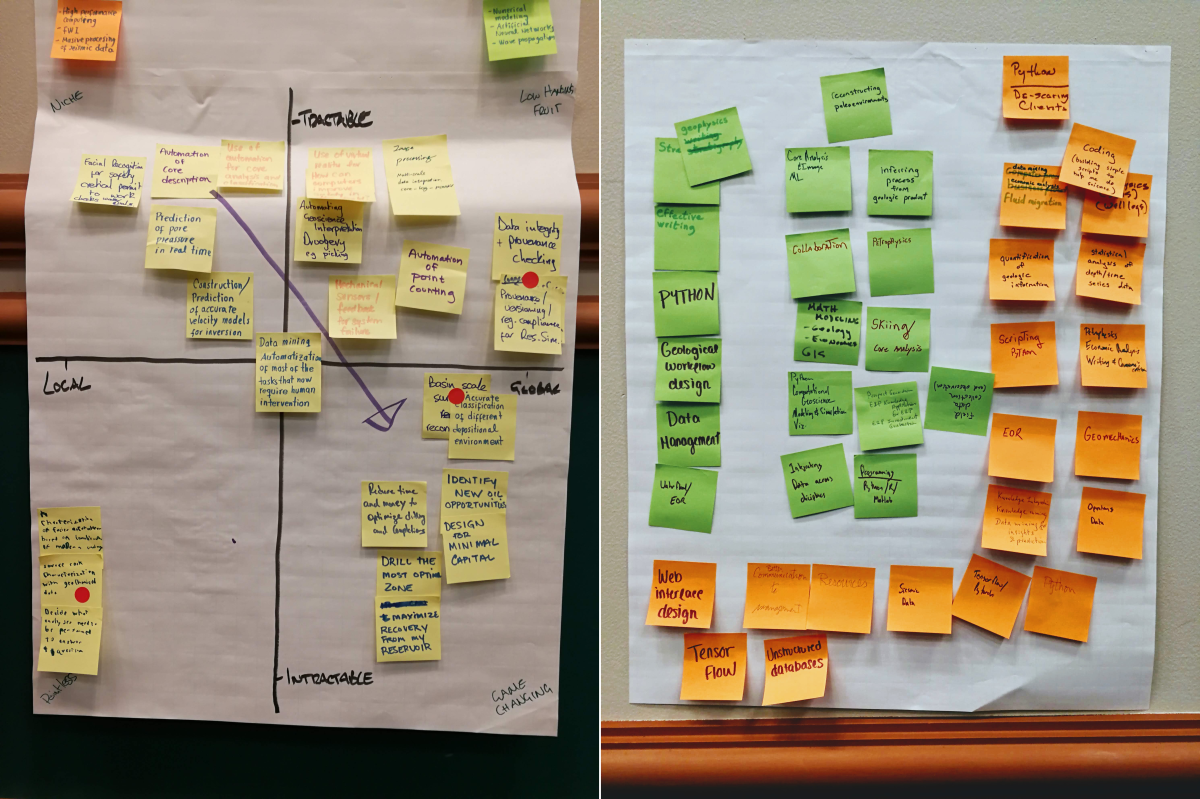

The event this weekend promises to be the biggest hackathon yet. It's our 10th, so I think we have the format figured out. But it's only the third in Europe, the theme (Visualization and interaction) is new for us, and most of the participants are new to hackathons, so there's still the thrill of the unknown!

Many thanks to our sponsors for helping to make this latest event happen! Support these organizations: they know how to accelerate innovation in our industry.

New events for the UK

By the way, we just announced two new hackathons, one in London and one in Aberdeen, for the autumn. They are happening just before PETEX, the PESGB petroleum conference; find out more here. You can skill up for these events at some new courses, also just announced. The UK Oil and Gas Authority is offering our Intro to Geocomputing and Machine Learning class for free — apply here for a place. The courses are oversubscribed, so be sure to tell the OGA why you should get a place!

Code Show

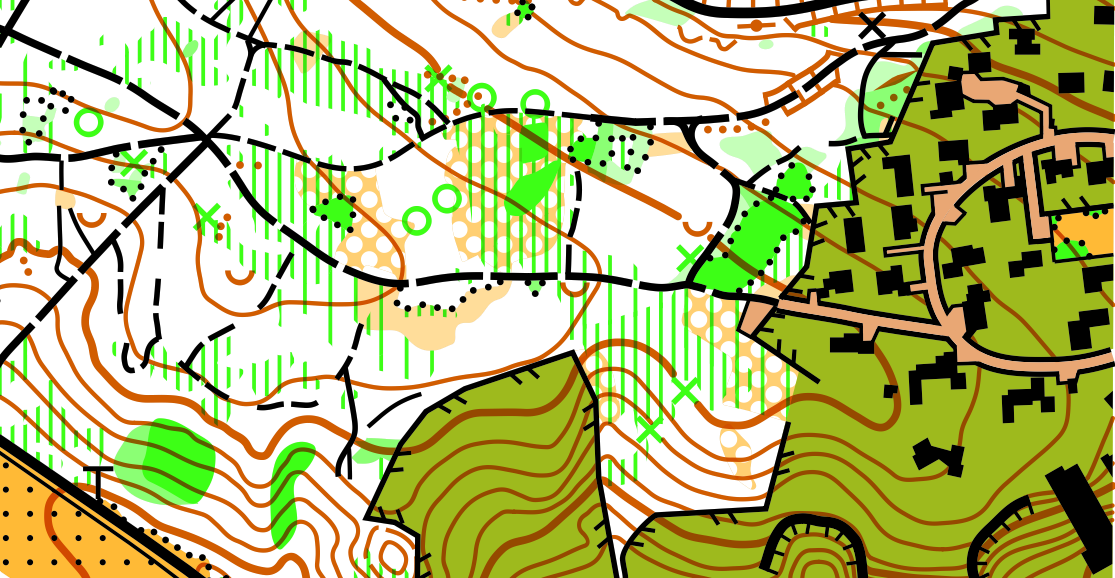

There is a lot of other stuff happening at the EAGE exhibition this year — the HPC area, a new start-up area, and a digital transformation area which I hope is as bold as it sounds. Here's the complete schedule and some highlights:

- WS02 Data Integration in Geoscience - Perspectives for Computational Methods, although it contains only 4 talks, so I'm not sure whether it will be short or contain a lot of discussion (which would be cool).

- Seismic Interpretation I - Automation through AI, Machine Learning, Deep Learning, with an accompanying poster session. Evan reported on this session last year.

- Geothermal Solutions I (Dedicated Session) and Geothermal Solutions II — we always enjoy geothermal sessions. And geothermal is hot right now (heh, no but seriously, it is).

- Computational Geoscience and Data/information Management, including the talk Digitalization in subsurface learning, which sounds interesting, but apparently you can't read abstracts online, so who knows.

There's lots of other stuff of course (EAGE has the most varied programme of any subsurface conference) but these are the sessions I'd be at if I had time to go to any sessions this year. But I won't, because the hackathon is not all that's happening!

Next week, starting on Tuesday, we're conducting a new experiment with the Code Show. In partnership with EAGE and Total, this is our attempt to bring some of the hackathon experience to everyone at EAGE. We'll be showing people the projects from the hackathon, talking to them about programming, and helping them get started on their own coding adventure. So if you're at EAGE, swing by Booth #1830 and say Hi.

Except where noted, this content is licensed
