Not getting hacked

/The end of the year is a great time to look around at your life and sort stuff out. One of the things you almost certainly need to sort out is your online security. Because if you haven't been hacked already (you probably have), you're just about to be.

Just look at some recent stories from the world of data security:

- Yesterday, it emerged that Uber concealed a hack that exposed data of 57 million users

- Last month, we learned that when Yahoo said 1 billion accounts had been compromised in August 2013... it was wrong. It was 3 billion. In other words: all of their user accounts.

- On 29 July, hackers stole 143 million account details, seriously compromising hundreds of thousands of people.

There are plenty of others; Wired has been keeping track of them — read more here. Or check out Wikipedia's list.

Despite all this, I see hardly anyone using a password manager, and anecdotally I hear that hardly anyone uses two-factor authentication either. This tells me that at least 80% of smart people, inlcuding lots of my friends and relatives, are in daily peril. Oh no!

After reading this post, I hope you do two things:

- Start using a password manager. If you only do one thing, do this.

- Turn on two-factor authentication for your most vulnerable accounts.

Start using a password manager

Please, right now, download and install LastPass on every device and in every browser you use. It's awesome:

- It stores all your passwords! This way, they can all be different, and each one can be highly secure.

- It generates secure, random passwords for new accounts you create.

- It scores you on the security level of your passwords, and lets you easily change insecure ones.

- The free version is awesome, and the premium version is only $2/month.

There are other password managers, of course, but I've used this one for years and it's excellent. Once you're set up, you can start changing passwords that are insecure, or re-used on multiple sites... or which are at Uber, Yahoo, or Equifax.

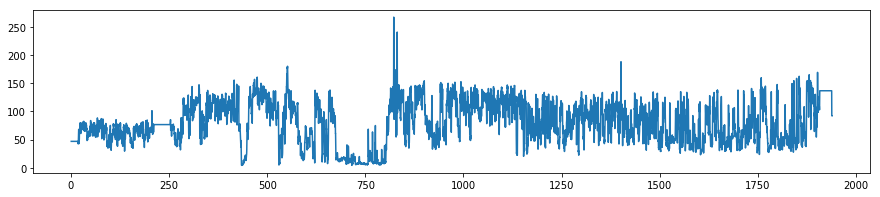

One surprise from using LastPass is being able to count the number of accounts I have created around the web over the years. I have 473 accounts stored in LastPass! That's 473 places to get hacked... how many places are you exposed?

The one catch: you need a bulletproof key for your password manager. Best advice: use a long pass-phrase instead.

Two-factor authentication

Sure, it's belt and braces — but you don't want your security trousers to fall down, right?

Er, anyway, the point is that even with a secure password, your password can still be stolen and your account compromised. But it's much, much harder if you use two-factor authentication, aka 2FA. This requires you to enter a code — from a hardware key or an app, or received via SMS — as well as your password. If you use an app, it introduces still another layer of security, because your phone should be locked.

I use Google's Authenticator app, and I like it. There's a little bit of hassle the first time you set it up, but after that it's plain sailing. I have 2FA turned on for all my 'high risk' accounts: Google, Twitter, Facebook, Apple, AWS, my credit card processor, my accounting software, my bank, my domain name provider, GitHub, and of course LastPass. Indeed, LastPass even lets me specify that logins must originate in Canada.

What else can you do?

There are some other easy things you can do to make yourself less hackable:

- Install updates on your phones, tablets, and other computers. Keep browsers and operating systems up to date.

- Be on high alert for phishing attempts. Don't follow links to sites like your bank or social media sites — type them into your browser if possible. Be very suspicious of anyone contacting you, especially banks.

- Don't use USB sticks. The cloud is much safer — I use Dropbox myself, it's awesome.

For more tips, check out this excellent article from Motherboard on not getting hacked.

Except where noted, this content is licensed

Except where noted, this content is licensed