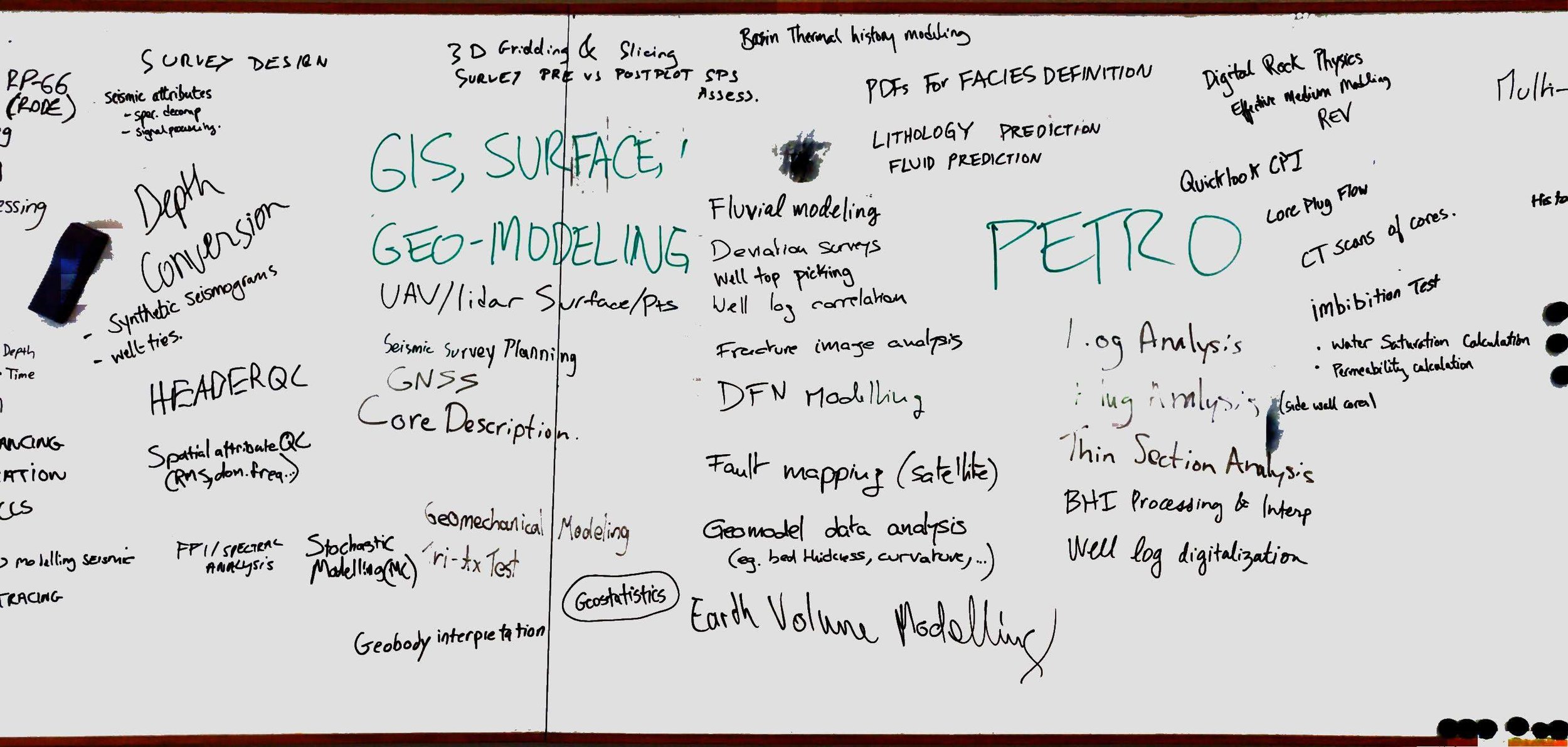

The Software Underground started as a mailing list in 2014 with maybe twenty participants, in the wake of the first geoscience hackathons. There are now more than 2,160 “rocks + computers” enthusiasts in the Underground, with about 20 currently joining every week. It’s the fastest-growing digital subsurface water-cooler in the world! And the only one.

The beating heart of the Software Underground is its free, open Slack chat room. Accessible to anyone, it brings this amazing community right to your mobile device or computer desktop. When it comes to the digital subsurface, it has a far higher signal:noise ratio than LinkedIn, Twitter, or Facebook. Here are some of the topics under discussion this week:

- The role of coding challenges in job interviews.
- Handling null values in 2D grids such as airborne gamma-ray.
- How to load an open seismic dataset into OpendTect.
- A new series of tutorials for the GeoStats.jl Julia package.
- Plenty of discussion about how to interpret negative oil prices!

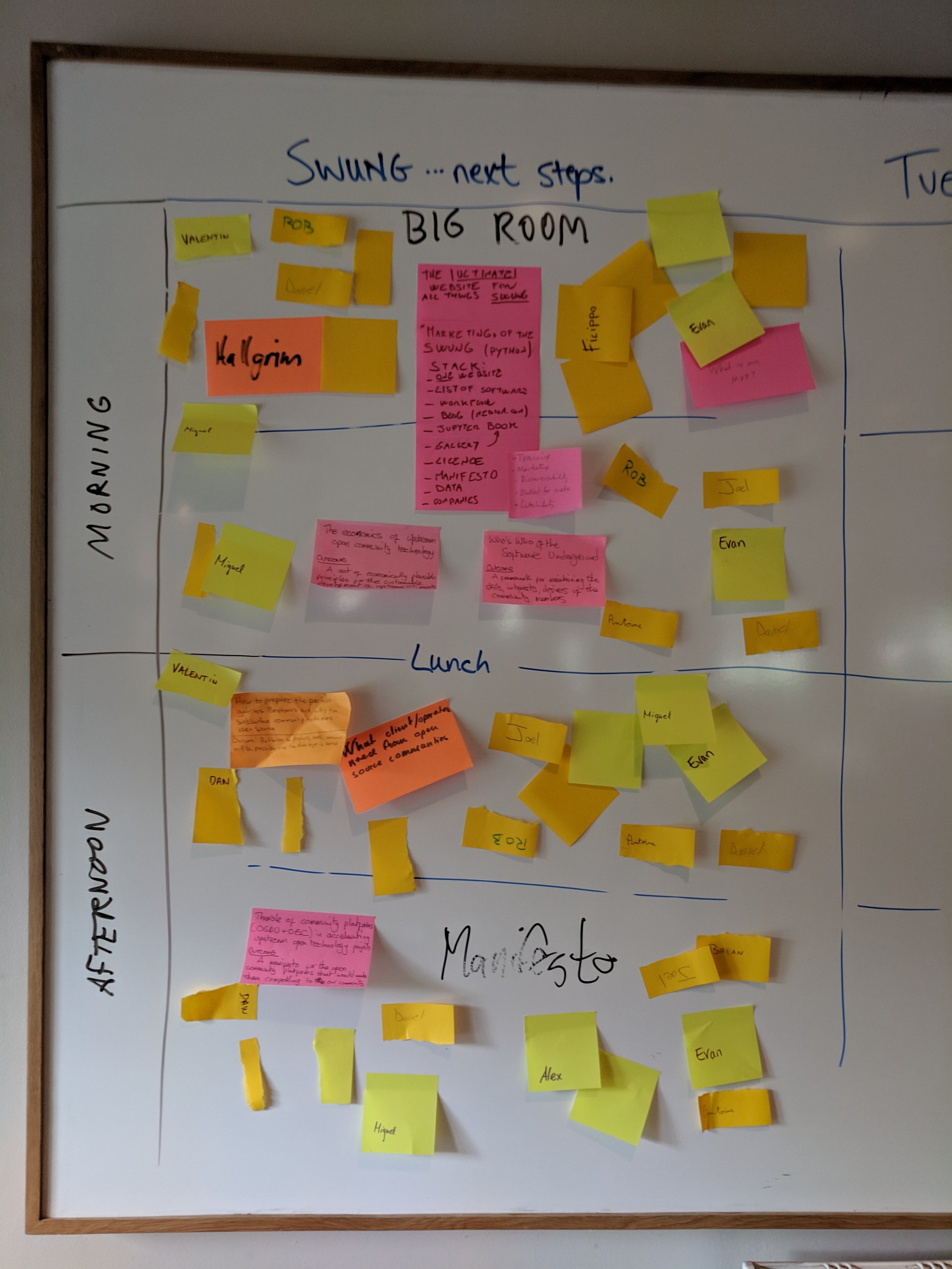

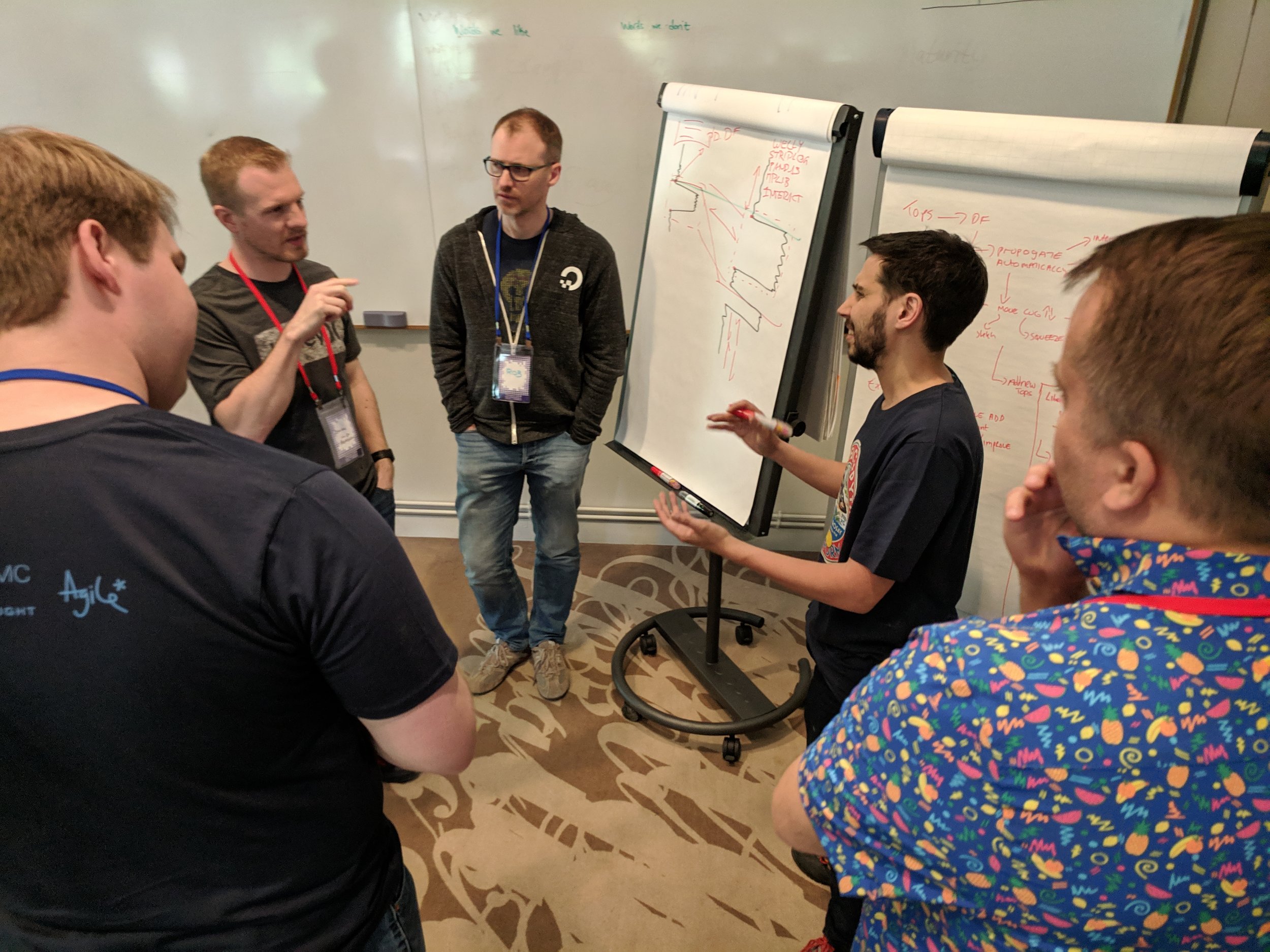

But the Software Underground — or Swung as its population affectionately call it — is more than just a chatroom. It organizes events. It compiles awesome documents. And it has great ambitions.

Evolution

To better explore those ambitions, the Underground is evolving.

Until now, it’s had a slightly murky existence, or non-existence, operating in mysterious ways and without visible means of support. When we tried to get a ‘non-profit’ booth at a conference last year, we couldn’t because the Software Underground isn’t just a non-profit, it’s a non-entity. It doesn’t legally exist.

Most of the time, this non-existence is a luxury. No committees! No accounts! No lawyers!

But sometimes it’s a problem. Like when you want to accept donations in a transparent way. Or take sponsorship from a corporation. Or pay for an event venue. Or have some accountability for what goes on in the community. Or make a decision without waiting for Matt to read his email. Sometimes it matters.

A small band of supporters and evangelists have decided the time has come for the Software Underground to stand up and be counted! We’re going legal. We’re going to exist.

What will change?

As little as possible! And everything!

The Slack will remain the same. Free for everyone. The digital subsurface water-cooler (or public house, if you prefer).

We’re taking on our biggest event yet in June — a fully online conference called TRANSFORM 2020. Check it out.

Soon we will exist legally, as we move to incorporate in Canada as a non-profit. Later, we can think about how membership and administration will work. For now, there will be some ‘interim’ directors, whose sole job is to establish the terms of the organization’s existence and get it to its first Annual General Meeting, sometime in 2021.

The goal is to make new things possible, with a new kind of society.

And you’re invited.

Except where noted, this content is licensed
